What Is Data Scraping? Definition, Uses, and Key Considerations

Overview

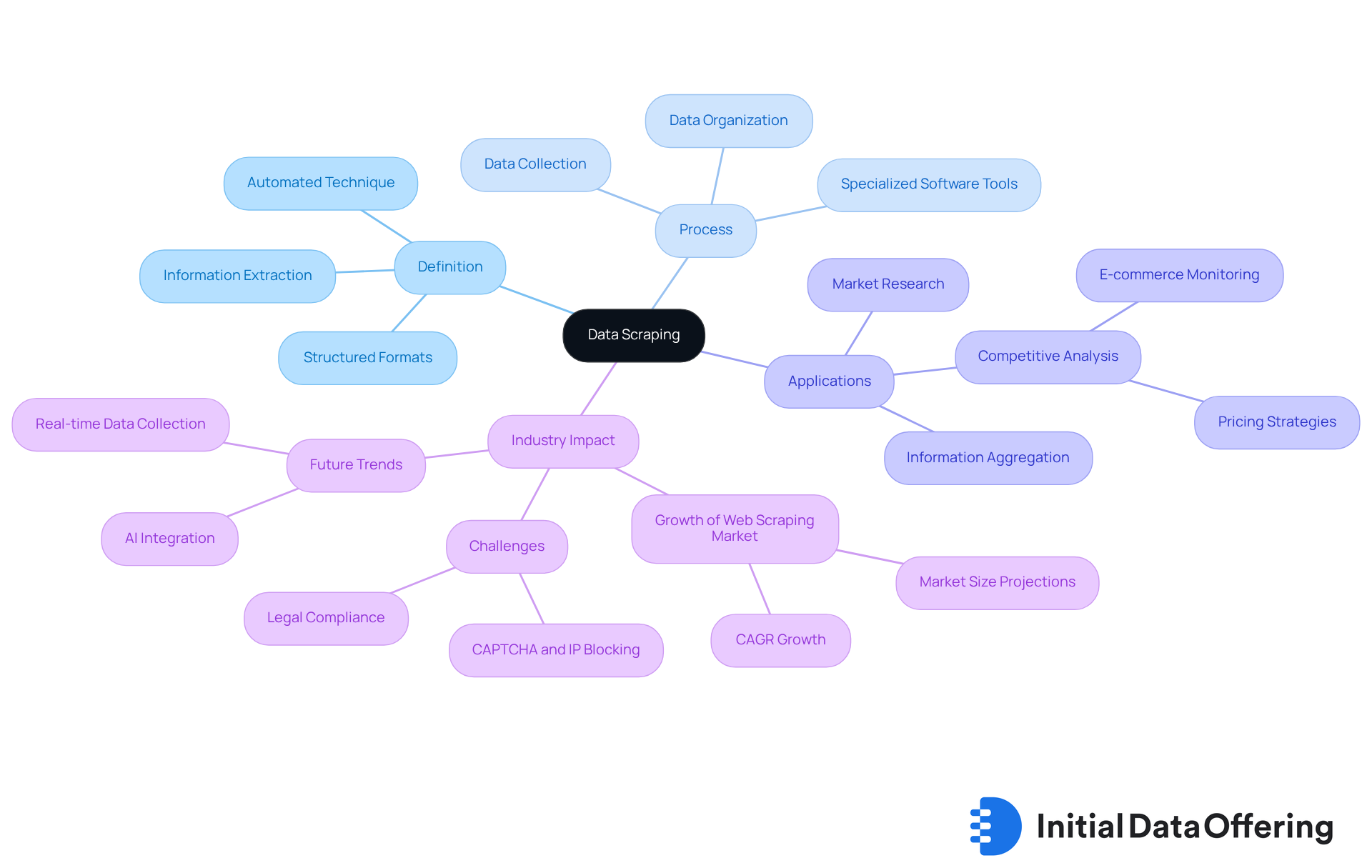

Data scraping, commonly referred to as web scraping, is an automated technique for extracting structured information from websites. It lets users compile and analyze large volumes of data efficiently, which is particularly valuable in today's data-driven landscape. Firms in many industries, especially e-commerce, use data scraping for competitive analysis and market insights. This gives it an essential role in modern business strategy, because it supplies the comprehensive data on which informed decisions depend.

How might your organization benefit from implementing data scraping techniques to enhance its competitive edge?

Introduction

Understanding the intricacies of data scraping is essential in today’s data-driven landscape. Businesses seek to harness the power of information for strategic advantage, and this automated technique—often referred to as web scraping—enables the extraction of structured data from various digital platforms. This capability facilitates applications across diverse industries such as e-commerce, finance, and healthcare.

However, as organizations increasingly rely on this method, they face a complex web of ethical and legal considerations that challenge their data collection efforts. How can companies navigate these challenges? Data scraping can sharpen their competitive edge, but realizing that potential requires a thoughtful approach to the ethical implications involved.

Ultimately, the successful integration of data scraping into business strategies hinges on understanding both its capabilities and the responsibilities that come with it.

Define Data Scraping: Understanding Its Core Concept

What is data scraping? It is an automated technique, often referred to as web scraping, designed for extracting structured information from websites and other digital platforms. This process utilizes specialized software tools or scripts to gather information that is typically presented in a human-readable format. Once extracted, the data can be organized into structured formats such as spreadsheets or databases, which facilitates further analysis. This capability allows users to efficiently compile extensive amounts of information, crucial for various applications, including market research, competitive analysis, and information aggregation.
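The step from "human-readable" to "structured" can be made concrete with a minimal Python sketch. It assumes the page text has already been fetched and follows a hypothetical `name - $price - availability` layout. `parse_listing` and `to_csv` are illustrative names, not part of any library, and only the standard library (`re`, `csv`, `io`) is used.

```python
import csv
import io
import re

# Hypothetical human-readable listing text, as it might appear on a page.
RAW_LISTING = """\
Blue Widget - $19.99 - In stock
Red Widget - $24.50 - Out of stock
Green Widget - $7.00 - In stock
"""

# Assumed layout: "<name> - $<price> - <availability>" on each line.
LINE_PATTERN = re.compile(
    r"^(?P<name>.+?) - \$(?P<price>\d+(?:\.\d{2})?) - (?P<availability>.+)$"
)


def parse_listing(text):
    """Turn human-readable lines into a list of dicts (structured records)."""
    records = []
    for line in text.splitlines():
        match = LINE_PATTERN.match(line.strip())
        if match:  # lines that don't fit the expected layout are skipped
            records.append({
                "name": match["name"],
                "price": float(match["price"]),
                "in_stock": match["availability"].lower() == "in stock",
            })
    return records


def to_csv(records):
    """Serialize records to CSV text that a spreadsheet can open."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price", "in_stock"])
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


rows = parse_listing(RAW_LISTING)
print(to_csv(rows))
```

Once the records are in a list of dicts, writing to a database instead of CSV is a matter of swapping the serializer. The parsing step is what the scraper actually contributes.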

In 2025, many enterprises use data extraction for competitive analysis. This is especially common in e-commerce, where firms routinely monitor competitor pricing and product listings. For example, one mid-sized e-commerce retailer reported a 300% increase in ROI on promotional campaigns after it implemented a custom data extraction system that tracked competitor prices multiple times a day. This practice has become vital for staying competitive in the rapidly evolving online retail landscape.
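The core of competitor price tracking is a comparison between your own prices and the scraped competitor prices. The sketch below is a hypothetical illustration: the SKU keys, prices and 5% threshold are all assumptions. A real system would feed it from its own scraper and catalog.

```python
def price_gap_percent(our_price, competitor_price):
    """How far our price sits above (+) or below (-) the competitor's, in percent."""
    if competitor_price <= 0:
        raise ValueError("competitor_price must be positive")
    return (our_price - competitor_price) / competitor_price * 100


def flag_undercuts(our_prices, competitor_prices, threshold_percent=5.0):
    """Return {sku: gap%} where the competitor is cheaper by more than the threshold."""
    flagged = {}
    for sku, our_price in our_prices.items():
        theirs = competitor_prices.get(sku)
        if theirs is None:
            continue  # competitor does not list this SKU
        gap = price_gap_percent(our_price, theirs)
        if gap > threshold_percent:
            flagged[sku] = round(gap, 1)
    return flagged


ours = {"A-100": 50.00, "B-200": 20.00, "C-300": 10.00}
theirs = {"A-100": 45.00, "B-200": 20.00}  # e.g. parsed from the latest scrape
print(flag_undercuts(ours, theirs))
```

With these inputs only `A-100` is flagged, because our 50.00 is about 11.1% above the competitor's 45.00. `C-300` is skipped because the competitor does not list it.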

Industry leaders underscore the importance of data extraction in modern business strategy. As one specialist put it, "If your information strategy doesn’t incorporate gathering yet, you’re not just lagging behind—you’re already unnoticed in your market." The statement highlights the critical role that data scraping plays in keeping businesses informed and adaptable in their decision-making. Overall, web extraction is an essential resource for organizations that want to use information for strategic advantage.

Contextualize Data Scraping: Applications and Industry Relevance

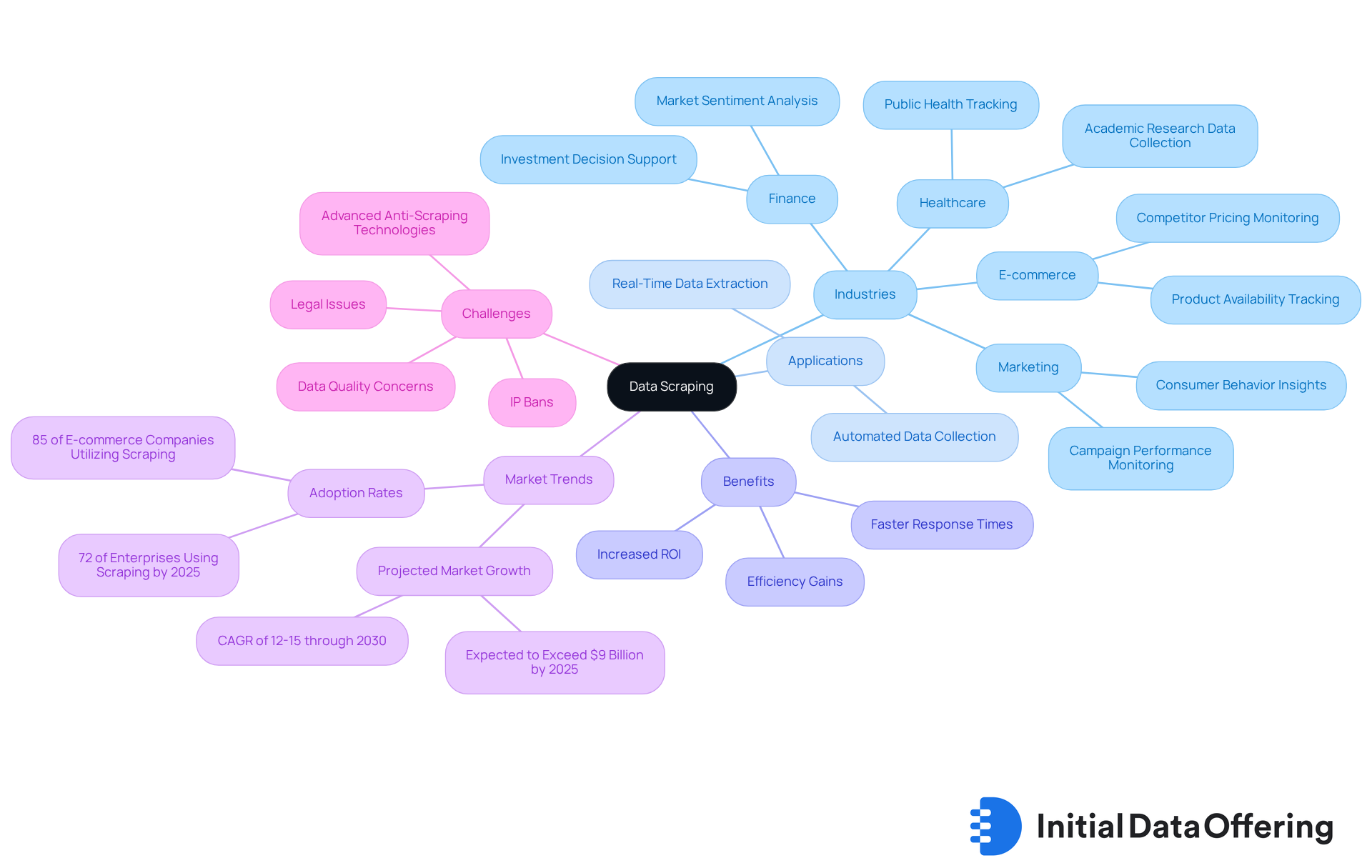

Data scraping has emerged as a vital practice across industries including e-commerce, finance, healthcare and marketing. In the e-commerce sector, over 85% of firms use it to monitor competitor pricing and product availability, which lets them adjust their strategies quickly and keep a competitive edge. For example, a mid-sized e-commerce retailer implemented a custom data extraction system that checked competitor websites every 15 minutes. The result was a 300% increase in ROI on promotional campaigns. More broadly, retailers that adopted web data extraction have cut their average response time to market changes from days to hours, which underscores the efficiency gains of automation. In finance, analysts extract information from news sources and social media platforms to gauge market sentiment and inform investment decisions.
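Checking competitor sites on a fixed cadence, such as every 15 minutes, comes down to comparing each new snapshot with the previous one. The following sketch assumes a caller-supplied `fetch_snapshot` function (hypothetical) that returns a `{sku: price}` dict. The `sleep` parameter can be swapped out so the loop can be exercised without actually waiting. In production, a scheduler such as cron often replaces the loop.

```python
import time

POLL_INTERVAL_SECONDS = 15 * 60  # 15-minute cadence, as in the retailer example


def diff_snapshots(previous, current):
    """Compare two {sku: price} snapshots and report additions, removals, and price moves."""
    changes = {"added": {}, "removed": {}, "price_changed": {}}
    for sku, price in current.items():
        if sku not in previous:
            changes["added"][sku] = price
        elif previous[sku] != price:
            changes["price_changed"][sku] = (previous[sku], price)
    for sku, price in previous.items():
        if sku not in current:
            changes["removed"][sku] = price
    return changes


def monitor(fetch_snapshot, on_change, rounds, sleep=time.sleep):
    """Poll `rounds` times; call on_change(changes) whenever something differs."""
    previous = fetch_snapshot()
    for _ in range(rounds):
        sleep(POLL_INTERVAL_SECONDS)
        current = fetch_snapshot()
        changes = diff_snapshots(previous, current)
        if any(changes.values()):  # at least one category is non-empty
            on_change(changes)
        previous = current


# Example with canned snapshots instead of live scraping:
snapshots = iter([{"A": 10.0, "B": 5.0}, {"A": 9.5, "C": 3.0}, {"A": 9.5, "C": 3.0}])
events = []
monitor(lambda: next(snapshots), events.append, rounds=2, sleep=lambda seconds: None)
print(events)
```

The second poll reports no event because nothing changed between the last two snapshots. Only genuine movements reach `on_change`.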

Healthcare researchers also benefit, because scraping lets them compile extensive datasets for academic studies and broadens the scope of their analyses. Automating collection significantly reduces the time and effort needed to gather insights, and this has turned data extraction into a fundamental business capability. As industries rely more on real-time insights, data scraping has become a crucial tool for efficiently collecting and analyzing data. Notably, 72% of mid-to-large enterprises and 85% of e-commerce companies are projected to use web data extraction for competitive monitoring by 2025. The global web data extraction market is also expected to surpass $9 billion by 2025, reflecting growing dependence on the practice. However, businesses must address challenges such as advanced anti-scraping technologies, IP bans, legal issues and data quality concerns to implement it successfully.
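Respecting a site's throttling signals is part of handling rate limits and IP bans responsibly. A common pattern is exponential backoff: the client waits progressively longer after each HTTP 429 (Too Many Requests) or 503 (Service Unavailable) response. The sketch below assumes a hypothetical `fetch(url)` callable that returns `(status_code, body)`. The base delay, growth factor and cap are illustrative defaults. The aim is to reduce load on the target server, not to evade blocking. If a server sends a `Retry-After` header, honoring it is generally preferable to a fixed schedule.

```python
import time

RETRYABLE_STATUS = {429, 503}  # Too Many Requests, Service Unavailable


def backoff_delays(attempts, base=1.0, factor=2.0, max_delay=60.0):
    """Delays (seconds) that grow geometrically from `base`, capped at `max_delay`."""
    return [min(base * factor ** i, max_delay) for i in range(attempts)]


def fetch_with_backoff(fetch, url, attempts=5, sleep=time.sleep):
    """Call fetch(url) until it returns a non-throttled status or attempts run out.

    `fetch` is a hypothetical callable returning (status_code, body).
    """
    delays = backoff_delays(attempts)
    for attempt in range(attempts):
        status, body = fetch(url)
        if status not in RETRYABLE_STATUS:
            return status, body
        if attempt < attempts - 1:  # no point waiting after the final attempt
            sleep(delays[attempt])
    raise RuntimeError(f"giving up on {url} after {attempts} throttled responses")


# Example with a fake server that throttles twice before answering:
responses = iter([(429, ""), (429, ""), (200, "<html>ok</html>")])
waits = []
result = fetch_with_backoff(lambda url: next(responses), "https://example.com/p/1", sleep=waits.append)
print(result, waits)
```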

Examine Ethical and Legal Frameworks: Navigating Data Scraping Responsibilities

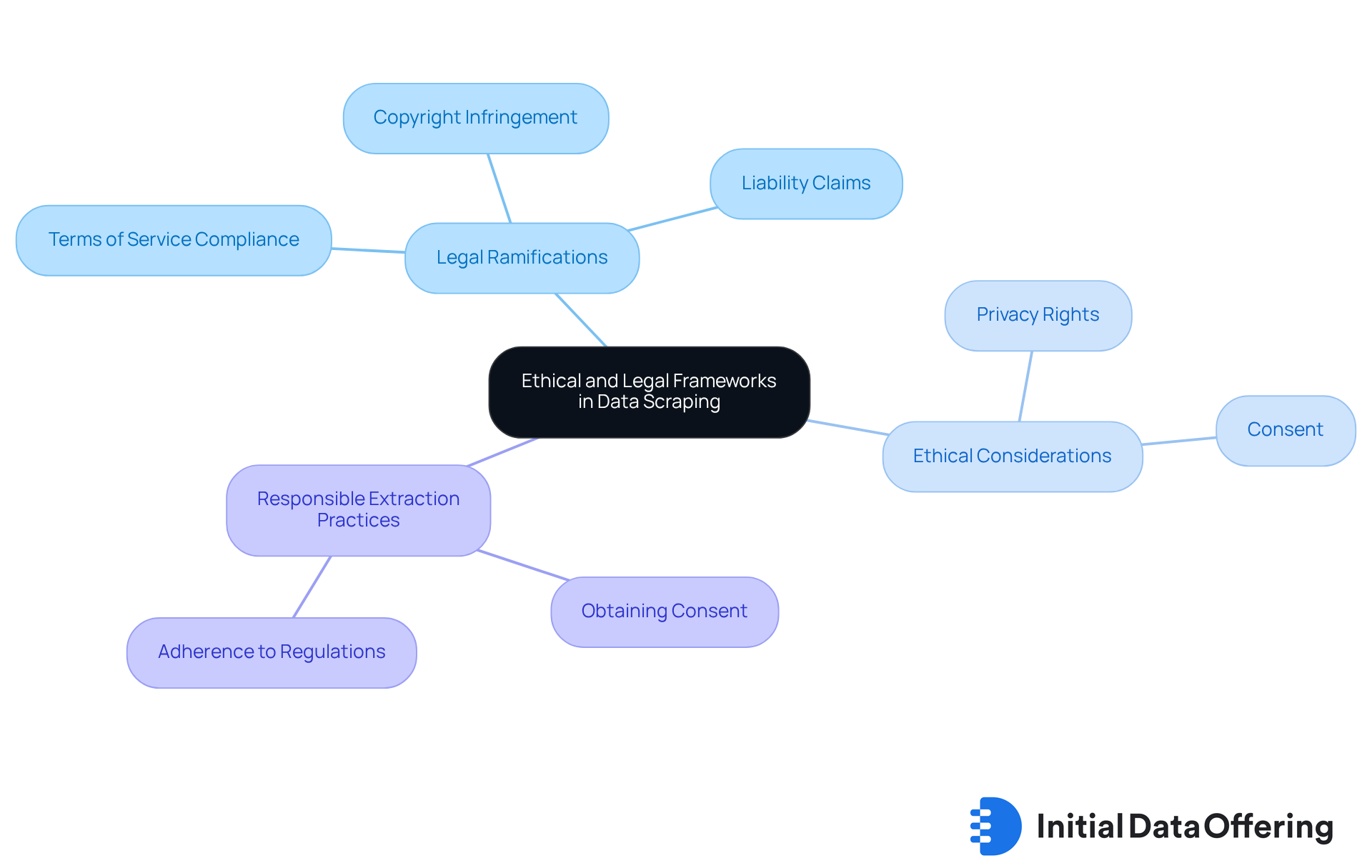

The ethical and legal ramifications of data harvesting form a complex landscape that varies across jurisdictions. Collecting publicly accessible information is generally permissible; however, complying with the terms of service of the relevant websites is crucial. Violating these terms can lead to significant legal consequences, including lawsuits for copyright infringement or breach of contract.

For instance, courts may interpret violations of terms of service as evidence of unauthorized access, which can impact liability claims, even when the scraped content is publicly available. Furthermore, ethical considerations gain prominence when personal information is involved, as this can infringe upon individuals' privacy rights.
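One practical check that can be automated before scraping is the site's `robots.txt` file. It is a crawler convention, not the site's terms of service, and honoring it does not by itself settle legal questions. Ignoring it, however, is a strong signal of unwelcome access. Python's standard-library `urllib.robotparser` can evaluate the rules. The robots.txt content, domain and user-agent string below are made up for illustration. A live crawler would call `set_url()` and `read()` instead of `parse()`.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content; a live crawler would use set_url() + read() instead.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /account/
Crawl-delay: 10
"""

AGENT = "example-price-monitor"  # hypothetical user-agent string

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())

for url in ("https://shop.example.com/products/123",
            "https://shop.example.com/account/orders"):
    print(url, "allowed" if robots.can_fetch(AGENT, url) else "disallowed")

# Crawl-delay (seconds between requests) requested by the site, or None if absent.
print("crawl delay:", robots.crawl_delay(AGENT))
```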

Organizations must adopt responsible extraction practices, such as:

- Obtaining consent when necessary

- Ensuring adherence to data protection regulations such as the GDPR and CCPA
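Data protection rules such as the GDPR favor data minimization, which means keeping only what the analysis needs. The sketch below drops fields that are not on an allow-list and redacts email addresses and phone-like numbers from free text before storage. The field names and regular expressions are illustrative assumptions. Regex redaction is heuristic and misses many forms of personal data, so it complements a proper legal review rather than replacing it.

```python
import re

# Illustrative allow-list: only fields the analysis actually needs are kept.
ALLOWED_FIELDS = {"product", "rating", "review_text"}

# Heuristic patterns; they will miss some formats and may over-match others.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def minimize_record(record):
    """Drop non-allow-listed fields and redact contact details in review text."""
    kept = {key: value for key, value in record.items() if key in ALLOWED_FIELDS}
    if "review_text" in kept:
        text = EMAIL_RE.sub("[email]", kept["review_text"])
        kept["review_text"] = PHONE_RE.sub("[phone]", text)
    return kept


scraped = {
    "product": "Blue Widget",
    "rating": 4,
    "reviewer_name": "Jane Doe",
    "review_text": "Great value! Reach me at jane.doe@example.com or +1 555 123 4567.",
}
print(minimize_record(scraped))
```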

GDPR breaches related to data extraction are reportedly on the rise, which underscores the need for vigilance. By carefully navigating these legal frameworks, businesses can mitigate risks and uphold ethical standards in their data collection. This approach fosters trust and accountability in their operations, which is essential in today’s data-driven environment.

Explore Technical Methods: How Data Scraping Works

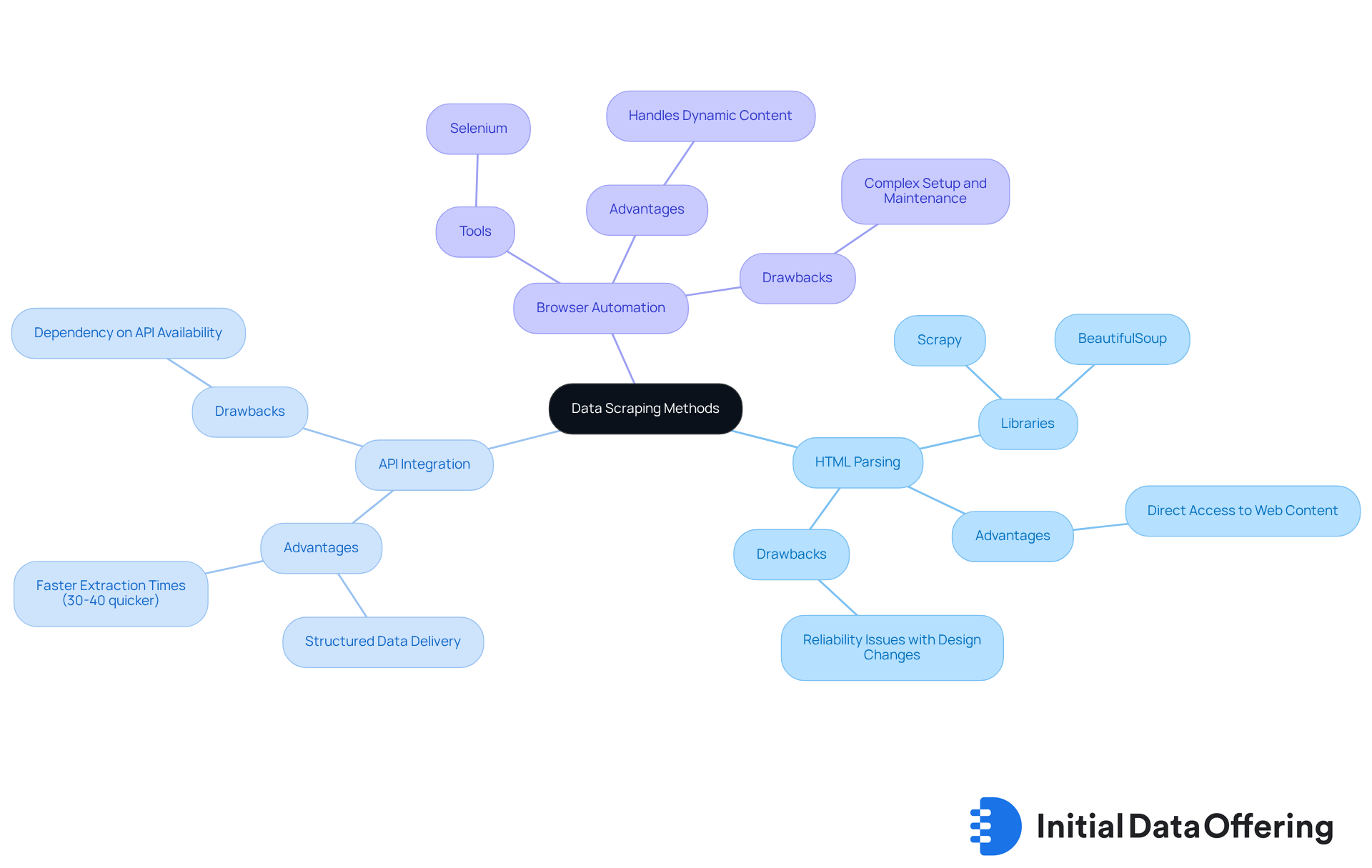

Data extraction can be achieved through various methods, including HTML parsing, API integration, and browser automation. Each of these approaches offers unique features that cater to different needs in data collection.

- HTML parsing retrieves information directly from a webpage's HTML structure, typically with Python tools such as the BeautifulSoup library or the Scrapy framework. It gives direct access to the page's content but is fragile: a redesign that changes the markup can break the extraction logic.

- API integration, by contrast, retrieves the structured data that a platform exposes through its application programming interface. It is often more dependable and efficient than parsing HTML because the data arrives already organized, which simplifies downstream processing. This makes it a favored option for many developers. Industry figures also credit AI-driven collection with 30-40% faster extraction times, although that speedup concerns AI-assisted tooling rather than APIs as such.

- Browser automation tools such as Selenium mimic human browsing actions like navigating, clicking and scrolling. This lets them retrieve information from dynamic sites whose content is loaded by JavaScript after the initial page load, so they are particularly useful when the data never appears in the raw HTML.
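The HTML-parsing approach from the first item above can be illustrated on a small, made-up product listing. To keep the sketch dependency-free, it uses the standard library's `html.parser.HTMLParser` instead of BeautifulSoup or Scrapy. The `product`, `name` and `price` class names are assumptions about the page's markup. The sketch also shows the fragility of this method: if the site renames those classes, the parser silently returns nothing.

```python
from html.parser import HTMLParser

# Made-up markup; real pages differ and change over time.
SAMPLE_HTML = """
<ul id="results">
  <li class="product"><span class="name">Blue Widget</span> <span class="price">$19.99</span></li>
  <li class="product"><span class="name">Red Widget</span> <span class="price">$24.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collect name/price text from <span> elements inside <li class="product">."""

    FIELDS = ("name", "price")  # assumed class names of the spans we want

    def __init__(self):
        super().__init__()
        self.products = []
        self._in_product = False
        self._field = None  # which field's <span> we are inside, if any

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "li" and "product" in classes:
            self.products.append({})
            self._in_product = True
        elif tag == "span" and self._in_product:
            self._field = next((f for f in self.FIELDS if f in classes), None)

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li":
            self._in_product = False

    def handle_data(self, data):
        if self._field:  # text outside the wanted spans is ignored
            current = self.products[-1]
            current[self._field] = current.get(self._field, "") + data.strip()


product_parser = ProductParser()
product_parser.feed(SAMPLE_HTML)
product_parser.close()
for product in product_parser.products:
    print(product["name"], float(product["price"].lstrip("$")))
```

With BeautifulSoup, calling `soup.select("li.product")` and then `select_one(".price")` on each item would express the same selection more compactly.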

Each approach has its benefits and drawbacks, and the selection of technique frequently relies on the particular needs of the data extraction task at hand.
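With the API-integration approach, the response arrives as labeled fields, so extraction is usually just JSON decoding. The sketch below assumes a hypothetical response shape: an `items` list whose entries have `sku` and `price`, plus a `next_page` cursor. It also assumes a caller-supplied `fetch_page(page)` that returns the raw JSON text. Real APIs document their own pagination, authentication and rate limits, and those take precedence over these assumptions.

```python
import json


def parse_products(payload_text):
    """Decode one page of a hypothetical API response into records plus the next-page cursor."""
    payload = json.loads(payload_text)
    products = [
        {"sku": item["sku"], "price": float(item["price"])}
        for item in payload.get("items", [])
    ]
    return products, payload.get("next_page")


def collect_all(fetch_page, first_page=1, max_pages=100):
    """Follow next_page cursors until none remains (or max_pages is reached).

    fetch_page(page) is a hypothetical callable that performs the HTTP request
    and returns the raw JSON text for that page.
    """
    results, page = [], first_page
    for _ in range(max_pages):
        products, next_page = parse_products(fetch_page(page))
        results.extend(products)
        if next_page is None:
            break
        page = next_page
    return results


# Canned responses standing in for real API calls:
FAKE_PAGES = {
    1: '{"items": [{"sku": "A-100", "price": "19.99"}], "next_page": 2}',
    2: '{"items": [{"sku": "B-200", "price": 5}], "next_page": null}',
}
print(collect_all(FAKE_PAGES.__getitem__))
```

The `max_pages` guard is a defensive choice. It stops the loop if a misbehaving API keeps returning a cursor.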

As one engineer put it, 'Selecting the appropriate tool is essential for enhancing efficiency and precision in information gathering.' Companies that invest in these capabilities now will gain an advantage in the data-driven economy. Keep in mind, however, that the legality of web data extraction varies by jurisdiction and can depend on a site's terms of service. Understanding these methods is essential for applying data scraping effectively across applications.

How can these techniques be integrated into your current workflows to improve data collection and analysis?

Conclusion

Data scraping is a pivotal technique in the digital landscape, enabling the extraction of structured information from various online sources. This automated process enhances data collection efficiency and empowers businesses to make informed decisions based on real-time insights. Understanding the essence of data scraping is crucial for organizations aiming to maintain a competitive edge in an increasingly data-driven world.

Key insights throughout the article highlight the diverse applications of data scraping across industries such as e-commerce, finance, and healthcare. The significant ROI achieved by businesses through strategic data extraction practices illustrates its importance in market analysis and decision-making. Additionally, the ethical and legal frameworks surrounding data scraping emphasize the need for responsible practices to uphold privacy rights and comply with regulations.

As industries continue to evolve and rely on data, embracing data scraping techniques becomes essential for organizations seeking to thrive. By investing in effective data extraction methods and adhering to ethical standards, businesses can harness the power of information to drive innovation and success in their respective fields. The call to action is clear: prioritize data scraping as a fundamental capability to navigate the complexities of the modern business environment.

Frequently Asked Questions

What is data scraping?

Data scraping, also known as web scraping, is an automated technique used to extract structured information from websites and digital platforms using specialized software tools or scripts.

How does data scraping work?

Data scraping works by gathering information that is typically presented in a human-readable format and organizing it into structured formats such as spreadsheets or databases for further analysis.

What are the applications of data scraping?

Data scraping is crucial for various applications, including market research, competitive analysis, and information aggregation.

How are enterprises using data scraping in 2025?

In 2025, many enterprises are leveraging information extraction for competitive analysis, particularly in e-commerce, to monitor competitor pricing and product listings.

What impact can data scraping have on e-commerce businesses?

It can improve returns substantially. One mid-sized e-commerce retailer reported a 300% increase in ROI on promotional campaigns after implementing a custom data extraction system to track competitor prices multiple times a day.

Why is information extraction important for businesses?

Information extraction is critical for modern business strategies as it enables organizations to remain informed and adaptable in their decision-making processes, helping them maintain competitiveness in the market.