Build Your ETL Pipeline: A Step-by-Step Guide for Analysts

Overview

This article is a step-by-step guide for analysts on building an effective ETL (Extract, Transform, Load) pipeline, a core component of data integration and informed decision-making. It walks through each phase of the process, from identifying data sources to automating and monitoring the pipeline, and addresses common challenges such as data quality and scalability. Along the way, it shows how a well-implemented pipeline strengthens analytical capabilities and invites analysts to consider how they can apply these practices to optimize their own data processes.

Introduction

Building an effective ETL pipeline is essential for organizations that want to use data in their decision-making. As businesses face an ever-growing influx of data from many sources, the ability to extract, transform, and load that data efficiently becomes increasingly critical. This guide offers a step-by-step approach to constructing an ETL pipeline, helping analysts streamline their workflows and improve data quality.

However, numerous challenges may arise at each stage of implementation. What strategies can be employed to ensure a seamless transition from raw data to actionable insights?

Define ETL Pipelines and Their Importance

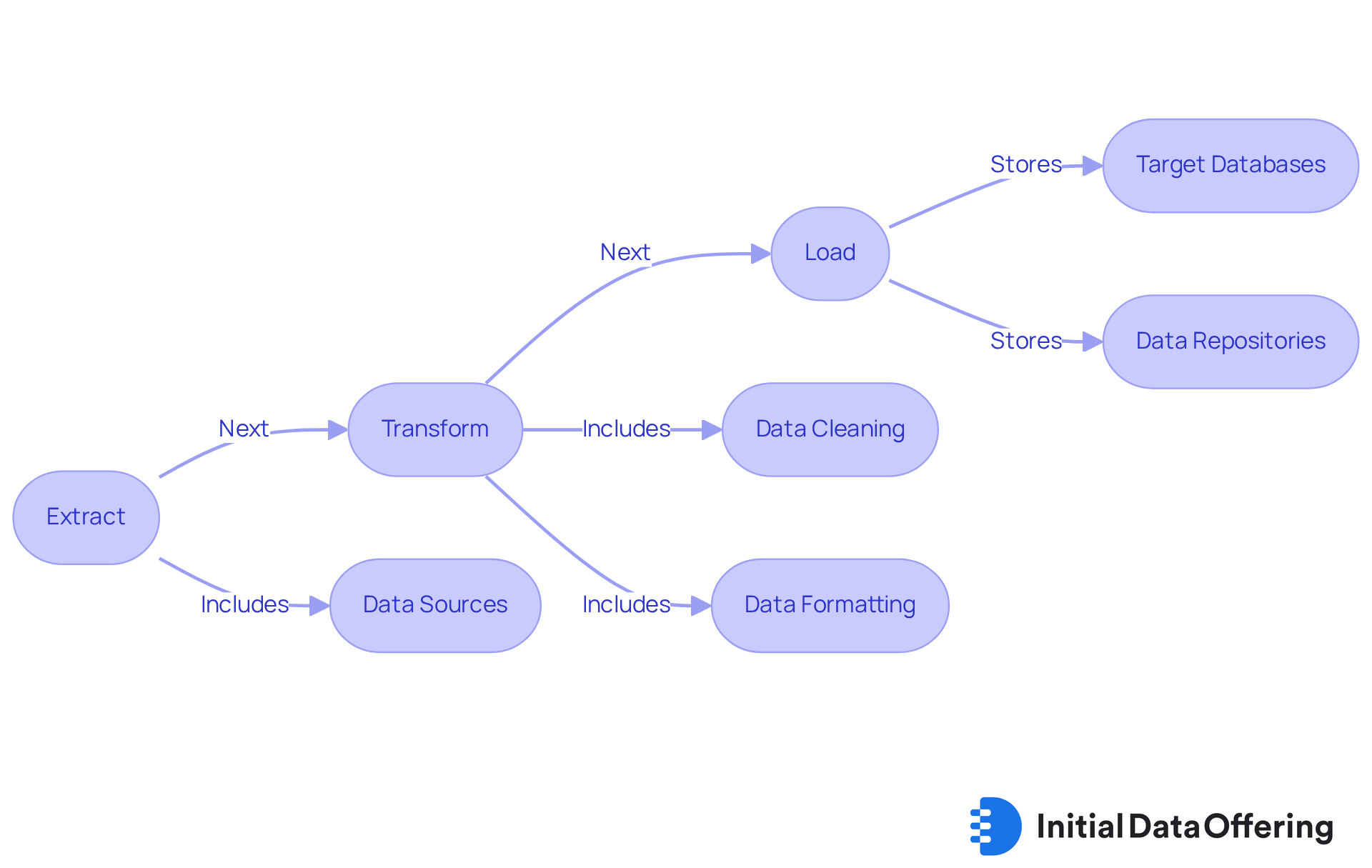

ETL, which stands for Extract, Transform, Load, is a data integration process that extracts data from various sources, converts it into a suitable format, and loads it into a target database or repository. ETL is essential for organizations that depend on data-driven decision-making because it ensures that data is accurate, consistent, and readily accessible for analysis. Automating these processes saves time and reduces errors, significantly improving an organization's ability to derive actionable insights from its data.
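
To make the three stages concrete, here is a minimal Python sketch of one extract, transform, and load pass. It assumes a small CSV export as the source and an in-memory SQLite database as the target; the `orders` table, its columns, and the function names are hypothetical and chosen only for illustration, not taken from any particular tool.

```python
import csv
import io
import sqlite3

# Hypothetical source: a small CSV export standing in for a real system.
RAW_CSV = """order_id,customer,amount
1,alice,19.99
2,bob,5.00
3,carol,42.50
"""


def extract(raw_text: str) -> list[dict[str, str]]:
    """Extract: read rows from the source (an in-memory CSV string here)."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows: list[dict[str, str]]) -> list[tuple[int, str, float]]:
    """Transform: convert types and normalise names into the target format."""
    return [
        (int(r["order_id"]), r["customer"].strip().title(), float(r["amount"]))
        for r in rows
    ]


def load(records: list[tuple[int, str, float]], conn: sqlite3.Connection) -> int:
    """Load: write records into the target table and return how many were written."""
    with conn:  # commits on success, rolls back if an exception is raised
        conn.execute(
            "CREATE TABLE IF NOT EXISTS orders "
            "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
        )
        conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    return len(records)


conn = sqlite3.connect(":memory:")
loaded = load(transform(extract(RAW_CSV)), conn)
print(loaded)  # prints 3
```

Keeping each stage as a separate function makes it easier to test, swap out a source or target, and later add validation or monitoring around a single stage.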

The value of ETL pipelines lies in their ability to combine data from diverse sources into a single, unified view for analysts. This matters in today's data-rich landscape, where organizations must sift through large volumes of data to find valuable insights. Without a well-defined ETL process, data silos can form, leading to inefficiencies and missed analytical opportunities. For example, The Valley Hospital reduced the time spent writing API code from four weeks to one day by implementing ETL integration, which streamlined operations and improved both healthcare decisions and patient experiences.

Similarly, Paycor centralized its data extraction in a Snowflake-based SQL repository, saving more than 36,000 analyst hours and moving to daily reporting for better visibility. These real-world examples illustrate the substantial impact ETL pipelines can have on data-driven decision-making, helping businesses use their resources more effectively and stay competitive. The data integration market is projected to grow from $15.18 billion in 2024 to $30.27 billion by 2030, underscoring the growing importance of ETL. Meanwhile, poor data quality is estimated to cost organizations about $12.9 million per year on average, which makes efficient ETL processes essential for maintaining data quality and supporting decision-making. As Jeffrey Richman notes, "For professionals who rely on business intelligence and data-driven insights, these channels are the crucial component."

Step-by-Step Process to Build an ETL Pipeline

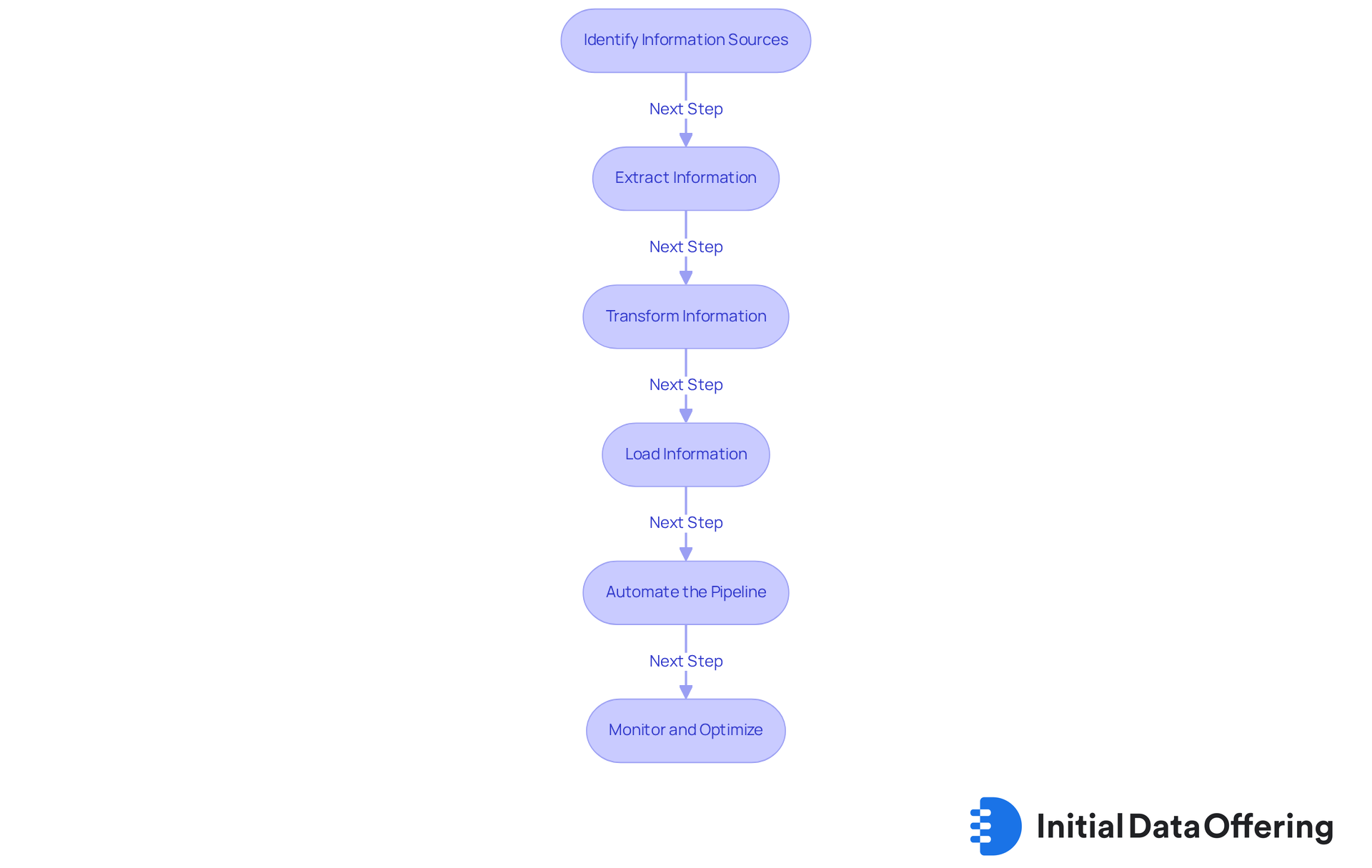

Building an ETL pipeline involves several critical steps that ensure data is efficiently processed and utilized:

- Identify Data Sources: Determine where your data originates. Sources may include databases, APIs, flat files, or cloud storage. Organizations that excel at data integration often draw on a variety of sources to build a more complete view of their operations.

- Extract Data: Use appropriate tools or scripts to pull data from the identified sources. Make sure you have the necessary permissions and access rights so that extraction stays compliant and secure. Tools such as Apache NiFi and Talend are popular for their extraction capabilities and can retrieve data from a wide range of sources.

- Transform Data: Once extracted, cleanse and transform the data to meet your analysis requirements. This may involve filtering out irrelevant records, converting data types, or aggregating values. Validating data during this phase is essential because it preserves quality and reduces the likelihood of errors downstream. As Joo Ann Lee remarked, 'Data science isn't focused on the volume of information but instead on the quality,' a reminder that quality is what matters throughout the pipeline.

- Load Data: After transformation, load the data into your target system, such as a data warehouse or database. The loading procedure must preserve data integrity, since poor loading practices can introduce significant discrepancies into later analysis. Tools such as Informatica and Microsoft Azure Data Factory are designed to support this step.

- Automate the Pipeline: Use scheduling and automation tools to run your ETL processes on a regular cadence. Automation saves time and keeps your data up to date without manual intervention. Organizations that adopt automated ETL solutions often report a 75% reduction in development time, which improves overall productivity.

- Monitor and Optimize: Continuously track the performance of your ETL process. Catching bottlenecks or errors early allows timely optimization and improves efficiency and reliability. Common problems such as inconsistent source data and duplicate records can degrade performance, so building visibility into your workflows gives you better oversight and keeps your analytics accurate and useful.
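
The Transform and Load steps above can be sketched together in Python. This is a minimal example under stated assumptions: the validation rules (integer `order_id`, non-empty `customer`, non-negative `amount`), the `orders` table, and the function names are all hypothetical. Invalid rows are set aside rather than silently dropped, duplicates are collapsed so the last record per key wins, and SQLite's `INSERT OR REPLACE` inside a single transaction makes a rerun idempotent.

```python
import sqlite3
from typing import Optional


def validate(rows: list[dict[str, Optional[str]]]) -> tuple[list[tuple[int, str, float]], list[dict]]:
    """Split raw rows into (valid records, rejected rows) using hypothetical rules."""
    valid: list[tuple[int, str, float]] = []
    rejected: list[dict] = []
    for row in rows:
        try:
            order_id = int(row["order_id"])
            amount = float(row["amount"])
        except (KeyError, TypeError, ValueError):
            rejected.append(row)  # missing field or unparseable value
            continue
        customer = (row.get("customer") or "").strip()
        if not customer or amount < 0:
            rejected.append(row)  # fails a business rule
            continue
        valid.append((order_id, customer.title(), round(amount, 2)))
    return valid, rejected


def deduplicate(records: list[tuple[int, str, float]]) -> list[tuple[int, str, float]]:
    """Keep one record per order_id; a later record overrides an earlier one."""
    return list({rec[0]: rec for rec in records}.values())


def load_idempotent(records: list[tuple[int, str, float]], conn: sqlite3.Connection) -> None:
    """Upsert records by primary key inside one transaction, so reruns are safe."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "CREATE TABLE IF NOT EXISTS orders "
            "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
        )
        conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", records)


raw_rows = [
    {"order_id": "1", "customer": "alice", "amount": "19.99"},
    {"order_id": "2", "customer": "", "amount": "5.00"},        # rejected: no customer
    {"order_id": "x", "customer": "bob", "amount": "3.00"},     # rejected: bad id
    {"order_id": "3", "customer": "carol", "amount": "-1"},     # rejected: negative amount
    {"order_id": "1", "customer": "alice", "amount": "21.00"},  # duplicate key: this one wins
]

valid, rejected = validate(raw_rows)
conn = sqlite3.connect(":memory:")
load_idempotent(deduplicate(valid), conn)
load_idempotent(deduplicate(valid), conn)  # a rerun leaves the table unchanged
print(len(rejected), conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0])  # prints 3 1
```

Keeping the rejected rows lets you report on data quality instead of losing records silently, and loading by key means that rerunning after a partial failure does not create duplicates.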

By following these steps and applying best practices, analysts can build robust ETL pipelines that strengthen analytics capabilities and support informed decision-making.
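
Scheduling itself is usually delegated to a scheduler such as cron or a workflow orchestrator. For the Automate and Monitor steps, the Python sketch below covers what happens inside a single run: retrying a flaky extract and recording per-stage durations and row counts. The names `run_pipeline`, `with_retries`, and `timed`, the metric keys, and the fixed retry delay are hypothetical simplifications; exponential backoff is a common alternative to a fixed delay.

```python
import logging
import time
from typing import Callable, TypeVar

T = TypeVar("T")
log = logging.getLogger("etl")


def with_retries(fn: Callable[[], T], attempts: int = 3, delay_s: float = 1.0) -> T:
    """Call fn, retrying after any exception with a fixed delay between attempts."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except Exception:
            if attempt == attempts:
                raise  # out of attempts: surface the last error
            log.warning("attempt %d/%d failed; retrying in %.1fs", attempt, attempts, delay_s)
            time.sleep(delay_s)
    raise AssertionError("unreachable")


def timed(stage: str, metrics: dict[str, float], fn: Callable[[], T]) -> T:
    """Run fn and record its wall-clock duration (seconds) under metrics."""
    start = time.perf_counter()
    try:
        return fn()
    finally:
        metrics[f"{stage}_seconds"] = time.perf_counter() - start


def run_pipeline(extract, transform, load, retry_delay_s: float = 1.0) -> dict[str, float]:
    """Run one ETL pass, returning per-stage durations and row counts."""
    metrics: dict[str, float] = {}
    rows = timed("extract", metrics, lambda: with_retries(extract, delay_s=retry_delay_s))
    records = timed("transform", metrics, lambda: transform(rows))
    loaded = timed("load", metrics, lambda: load(records))
    metrics["rows_extracted"] = len(rows)
    metrics["rows_loaded"] = loaded
    log.info("pipeline finished: %s", metrics)
    return metrics


logging.basicConfig(level=logging.INFO)
calls = {"n": 0}


def flaky_extract():
    """Hypothetical source that fails once, then succeeds."""
    calls["n"] += 1
    if calls["n"] == 1:
        raise ConnectionError("simulated transient failure")
    return [{"id": 1}, {"id": 2}]


metrics = run_pipeline(flaky_extract, lambda rows: rows, lambda recs: len(recs), retry_delay_s=0.1)
print(calls["n"], metrics["rows_loaded"])  # prints 2 2
```

Comparing `rows_extracted` with `rows_loaded` across runs, and watching which `*_seconds` value grows, is a simple way to spot both data loss and the slowest stage.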

Identify Challenges and Solutions in ETL Implementation

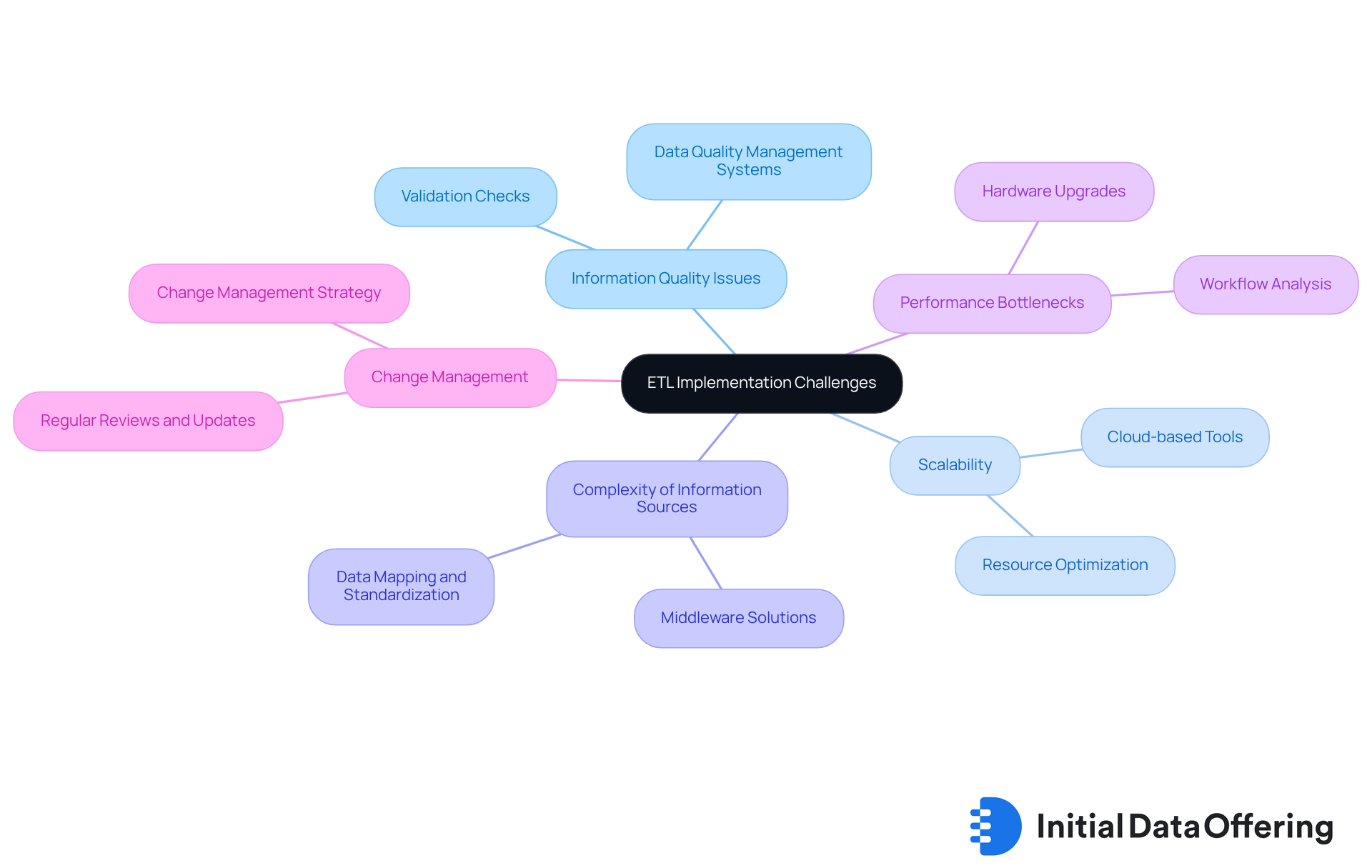

Implementing an ETL pipeline can present several challenges that require careful consideration to ensure effective data management.

- Data Quality Issues: Inconsistent or erroneous data can lead to flawed analyses. To mitigate this risk, run validation checks during the transformation phase to protect data integrity and make your data-driven decisions more reliable.

- Scalability: As data volumes grow, pipelines may struggle to keep pace. Cloud-based ETL tools can help because they are designed to scale with your data requirements, maintaining performance as volumes increase.

- Complexity of Data Sources: Combining data from many origins can be intricate and time-consuming. To simplify this task, consider middleware or an ETL tool that supports diverse formats and protocols, which makes integration smoother.

- Performance Bottlenecks: Slow processing delays decision-making, which is costly in a fast-paced environment. By profiling your ETL workflows, you can identify slow queries or transformations and improve the overall efficiency of your processes.

- Change Management: As business needs evolve, your ETL processes must evolve too. A change management strategy that includes regular reviews and updates keeps the pipeline aligned with new data sources and requirements, supporting your organization's adaptability.
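
Two of these challenges, scalability and change management, can be partly addressed in code. The Python sketch below reads a CSV source in fixed-size chunks so memory use stays bounded regardless of file size, and checks the header against an expected schema so that an upstream change fails loudly instead of corrupting downstream tables. `EXPECTED_COLUMNS`, `SchemaDriftError`, and `read_in_chunks` are hypothetical names, and treating extra columns as drift is a deliberately strict choice you may want to relax.

```python
import csv
import io
from itertools import islice
from typing import Iterator, Optional, Sequence, TextIO

# Hypothetical expected schema; change it deliberately, as part of a reviewed update.
EXPECTED_COLUMNS = {"order_id", "customer", "amount"}


class SchemaDriftError(Exception):
    """Raised when the source header no longer matches EXPECTED_COLUMNS."""


def check_schema(fieldnames: Optional[Sequence[str]]) -> None:
    """Fail fast if columns were added, removed, or renamed upstream."""
    actual = set(fieldnames or [])
    missing = EXPECTED_COLUMNS - actual
    extra = actual - EXPECTED_COLUMNS
    if missing or extra:
        raise SchemaDriftError(f"missing={sorted(missing)} extra={sorted(extra)}")


def read_in_chunks(stream: TextIO, chunk_size: int = 10_000) -> Iterator[list[dict[str, str]]]:
    """Yield lists of at most chunk_size rows so memory stays bounded."""
    reader = csv.DictReader(stream)
    # Reading fieldnames consumes the header row; as this is a generator,
    # the check runs when the first chunk is requested.
    check_schema(reader.fieldnames)
    while True:
        chunk = list(islice(reader, chunk_size))
        if not chunk:
            return
        yield chunk


# Usage: 25 rows read 10 at a time, then a source whose column was renamed.
data = "order_id,customer,amount\n" + "".join(f"{i},c{i},{i}.0\n" for i in range(25))
sizes = [len(chunk) for chunk in read_in_chunks(io.StringIO(data), chunk_size=10)]
print(sizes)  # prints [10, 10, 5]

try:
    next(read_in_chunks(io.StringIO("order_id,client,amount\n1,a,2\n")))
except SchemaDriftError as err:
    print(err)  # prints missing=['customer'] extra=['client']
```

Each chunk can be validated and loaded before the next one is read, and a failed schema check is a natural trigger for the regular review that change management calls for.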

Conclusion

Building a robust ETL pipeline is not merely a technical endeavor; it is a strategic necessity for organizations that rely on data-driven insights. Extracting, transforming, and loading data ensures that it is accurate, consistent, and readily available for analysis, which empowers analysts to make informed decisions. By implementing effective ETL pipelines, businesses can eliminate data silos and improve operational efficiency, ultimately gaining a competitive advantage in a data-centric world.

Several key steps are essential for constructing an effective ETL pipeline. These include:

- Identifying information sources

- Extracting and transforming data

- Loading it into appropriate systems

- Automating the processes for efficiency

- Continuously monitoring performance

Each step is crucial for maintaining data integrity and ensuring that the pipeline adapts to changing business needs. This guide also covered common challenges, such as data quality issues and performance bottlenecks, along with practical solutions for each.

In conclusion, the importance of a well-defined ETL pipeline cannot be overstated. As organizations rely more heavily on data for strategic decisions, investing in effective ETL processes strengthens analytical capabilities and safeguards data quality. Following best practices in ETL development is essential for analysts who want to realize the full potential of their data. By addressing challenges proactively and optimizing their ETL workflows, organizations can unlock valuable insights and drive successful outcomes in their data initiatives.

Frequently Asked Questions

What does ETL stand for and what is its purpose?

ETL stands for Extract, Transform, Load. Its purpose is to integrate data by extracting it from various sources, converting it into a suitable format, and loading it into a target database or repository.

Why are ETL processes important for organizations?

ETL processes are important because they ensure that data is accurate, consistent, and readily accessible for analysis, which is essential for data-driven decision-making. They help organizations save time, reduce errors, and derive more actionable insights from their data.

How do ETL pipelines help in data analysis?

ETL pipelines combine data from diverse sources into a single, unified view, which analysts need in order to extract valuable insights from large volumes of data in today's data-rich landscape.

What can happen if an organization does not have a well-defined ETL process?

Without a well-defined ETL process, data silos may develop, leading to inefficiencies and missed analytical opportunities.

Can you provide an example of how ETL integration has benefited an organization?

The Valley Hospital reduced API code writing time from four weeks to just one day by implementing ETL integration, which streamlined operations and enhanced healthcare decisions and patient experiences.

What impact did Paycor experience from centralizing its data extraction?

Paycor centralized its data extraction in a Snowflake-based SQL repository, saving more than 36,000 analyst hours and moving to daily reporting for improved visibility.

What is the projected growth of the data integration market?

The data integration market is projected to grow from $15.18 billion in 2024 to $30.27 billion by 2030, indicating the increasing importance of ETL methods.

What is the estimated annual cost of poor data quality for organizations?

Poor data quality is estimated to cost organizations about $12.9 million per year on average, highlighting the need for efficient ETL processes that maintain data quality and support decision-making.