4 Best Practices for Optimizing Data Pipelines Effectively

Overview

The article outlines four best practices for optimizing data pipelines effectively. These practices focus on:

- Understanding pipeline fundamentals

- Exploring different types

- Implementing monitoring and governance strategies

- Identifying common challenges

Selecting the right pipeline type based on operational needs is crucial; it ensures that the data pipeline aligns with specific business objectives. Moreover, ensuring data quality through governance is essential to maintain integrity and reliability. Addressing challenges such as integration complexities can significantly enhance operational efficiency and lead to better decision-making.

How can these strategies be applied in your organization to improve data handling? By implementing these best practices, organizations can navigate the complexities of data management more effectively.

Introduction

Data pipelines serve as the backbone of modern data management, facilitating the seamless transfer of information from various sources to actionable insights. As data continues to grow exponentially, organizations are increasingly pressed to optimize these pipelines for enhanced performance and reliability. This optimization not only involves addressing quality control but also tackling integration complexities that can arise.

How can organizations effectively navigate these hurdles to streamline their data operations and fully leverage the potential of their data assets? By understanding the features of data pipelines, organizations can appreciate their advantages in driving informed decision-making and ultimately reaping the benefits of improved operational efficiency.

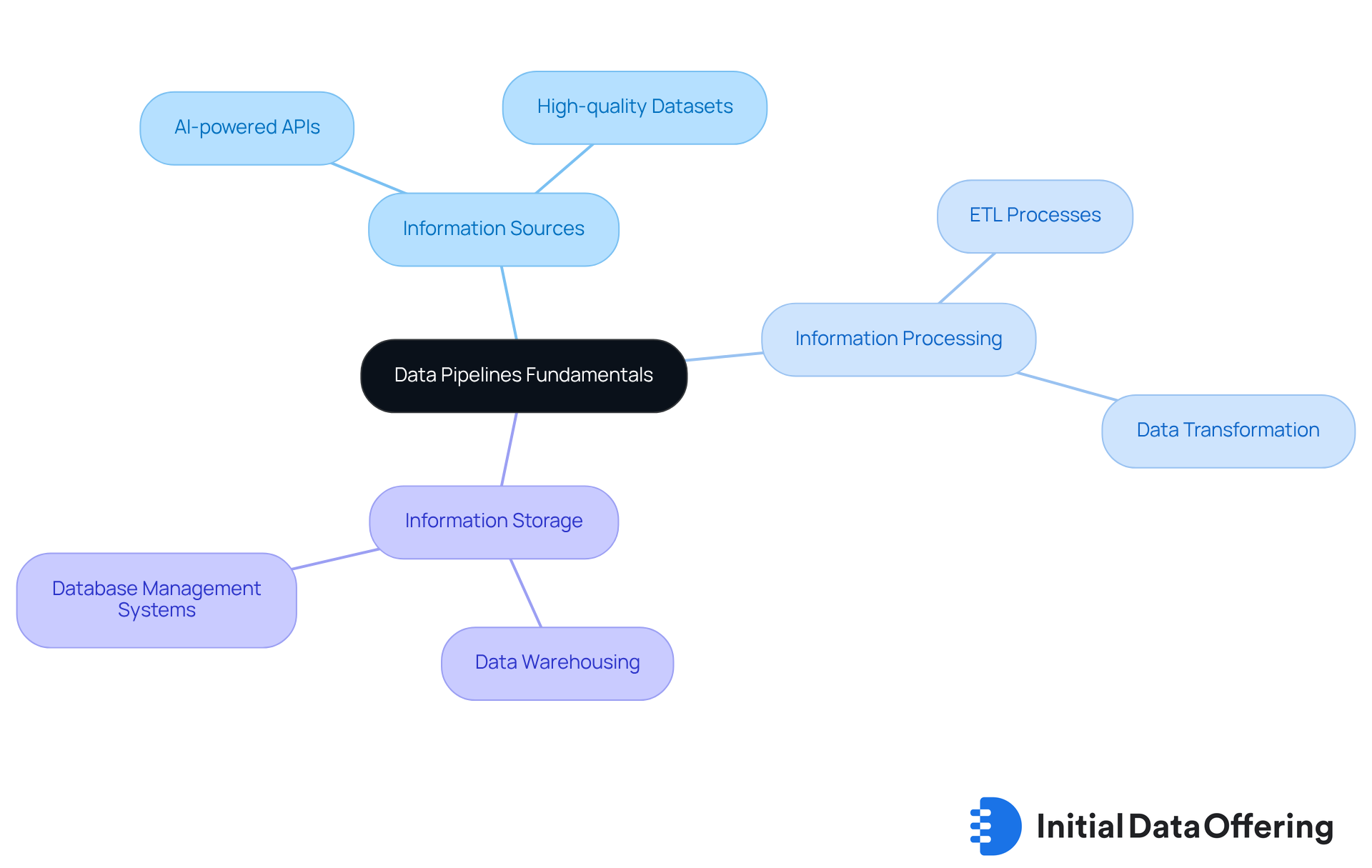

Understand the Fundamentals of Data Pipelines

Data pipelines are automated systems designed to facilitate the transfer of information from one or more sources to a destination, typically involving processes such as extraction, transformation, and loading (ETL). Understanding the key elements—information sources, information processing, and information storage—is crucial. Each element plays a vital role in ensuring information quality and accessibility.
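The three ETL stages described above can be sketched in a few lines of Python. This is a minimal illustration rather than a production design: the sample CSV, the `payments` table, and the function names are all assumptions made for the example, and an in-memory SQLite database stands in for the destination.

```python
import csv
import io
import sqlite3

# Hypothetical source data; a real pipeline would read from files, APIs, or databases.
RAW_CSV = """id,name,amount
1, Alice ,10.50
2,Bob,not_a_number
3,Carol,7.25
"""

def extract(text):
    """Extract: parse raw CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: trim whitespace, cast types, and drop rows that fail to parse."""
    clean = []
    for row in rows:
        try:
            clean.append((int(row["id"]), row["name"].strip(), float(row["amount"])))
        except ValueError:
            continue  # skip malformed rows; a real pipeline might quarantine them
    return clean

def load(rows, conn):
    """Load: write the cleaned rows into a destination table."""
    conn.execute("CREATE TABLE IF NOT EXISTS payments (id INTEGER, name TEXT, amount REAL)")
    conn.executemany("INSERT INTO payments VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")  # in-memory stand-in for the destination store
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute("SELECT id, name, amount FROM payments ORDER BY id").fetchall()
print(loaded)
```

Keeping each stage as its own function mirrors the source, processing, and storage elements: any one of them can be swapped out without touching the others.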

For instance, Initial Information Offering leverages AI-powered APIs to provide high-quality datasets and advanced entity resolution capabilities, which enhances operational efficiency. A well-organized pipeline not only improves information flow but also decreases latency and boosts overall operational efficiency.

Recognizing these fundamentals empowers organizations to develop more effective information strategies that align with their business objectives, especially when integrating AI-driven solutions.

How might these insights influence your approach to information management?

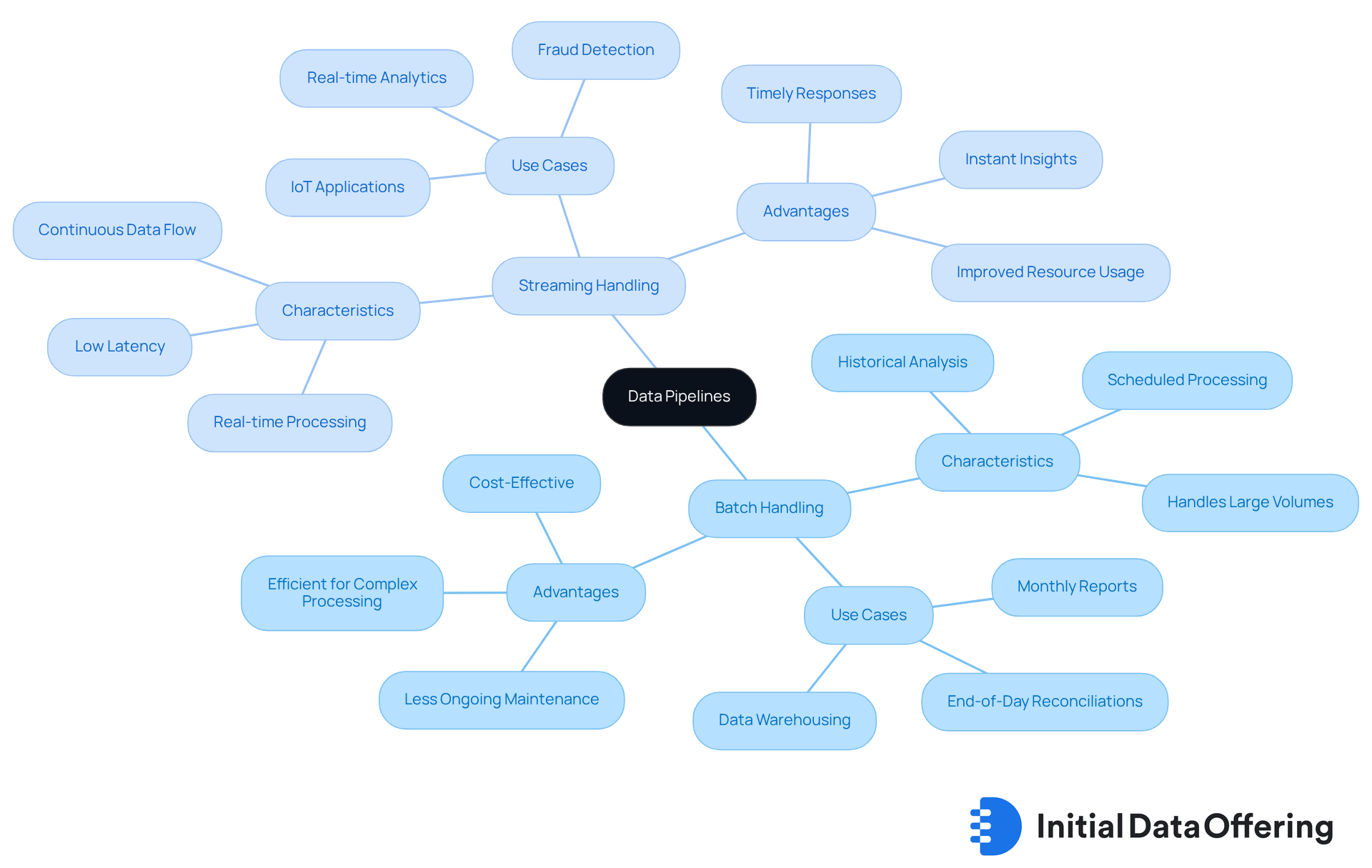

Explore Different Types of Data Pipelines

Data pipelines can be classified into two main categories: batch processing and stream processing. Batch pipelines process large volumes of information at scheduled times, which makes them suitable for situations where up-to-the-minute information is not essential. For instance, financial institutions frequently employ batch processing to produce monthly reports or conduct end-of-day reconciliations. This approach enables them to analyze historical information effectively.
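As a rough illustration of the end-of-day reconciliation mentioned above, the sketch below processes one day's accumulated records in a single pass. The record layout, account names, and the `end_of_day_totals` helper are hypothetical.

```python
from collections import defaultdict
from datetime import date

# Hypothetical transactions accumulated over time: (account, day, amount).
transactions = [
    ("acct-1", date(2024, 1, 31), 120.0),
    ("acct-2", date(2024, 1, 31), -40.0),
    ("acct-1", date(2024, 1, 31), -20.0),
    ("acct-2", date(2024, 2, 1), 15.0),
]

def end_of_day_totals(records, day):
    """Process all of one day's records in a single run and return net totals per account."""
    totals = defaultdict(float)
    for account, tx_day, amount in records:
        if tx_day == day:
            totals[account] += amount
    return dict(totals)

report = end_of_day_totals(transactions, date(2024, 1, 31))
print(report)
```

The defining trait is that nothing is computed until the scheduled run, when the full set of records for the period is available.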

On the other hand, streaming systems process information continuously as it arrives. This allows for real-time analytics and instant insights, which are crucial for applications requiring timely responses. A prime example is real-time fraud detection, where financial institutions can monitor transactions and respond to suspicious activities instantly.
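The sketch below shows the streaming idea in miniature: each event is examined as it arrives, using only a small running state per account. The flagging rule (an amount several times the account's running average) and its parameters are assumptions chosen for illustration, not a real fraud model.

```python
from collections import defaultdict

def flag_suspicious(events, multiplier=5.0, min_history=3):
    """Yield events whose amount far exceeds the account's running average so far."""
    counts = defaultdict(int)
    sums = defaultdict(float)
    for account, amount in events:
        n = counts[account]
        # Only judge an account once it has some history to compare against.
        if n >= min_history and amount > multiplier * (sums[account] / n):
            yield account, amount
        counts[account] += 1
        sums[account] += amount

# An iterator stands in for a live feed such as a message queue.
stream = iter([
    ("a", 20.0), ("a", 25.0), ("a", 15.0),
    ("a", 500.0), ("b", 900.0), ("a", 30.0),
])
alerts = list(flag_suspicious(stream))
print(alerts)
```

Because `flag_suspicious` is a generator, a flagged event is emitted as soon as it is seen instead of waiting for the rest of the input.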

The choice between these two pipeline types depends on specific operational requirements and on the characteristics of the data being processed. Industry leaders emphasize that while batch operations excel at managing extensive historical information, streaming techniques are vital for situations that necessitate prompt insights and immediate actions. Moreover, companies reportedly produce more than 2.5 quintillion bytes of information each day, underscoring the volume of data that both pipeline types must handle.

It's important to note that stream processing requires specialized infrastructure to manage continuous streams, along with high-performance computing resources. This requirement can present operational challenges for organizations. By recognizing these differences and current trends, including hybrid architectures that combine both pipeline types, organizations can strategically choose the most suitable approach. This choice ultimately enhances their decision-making capabilities.

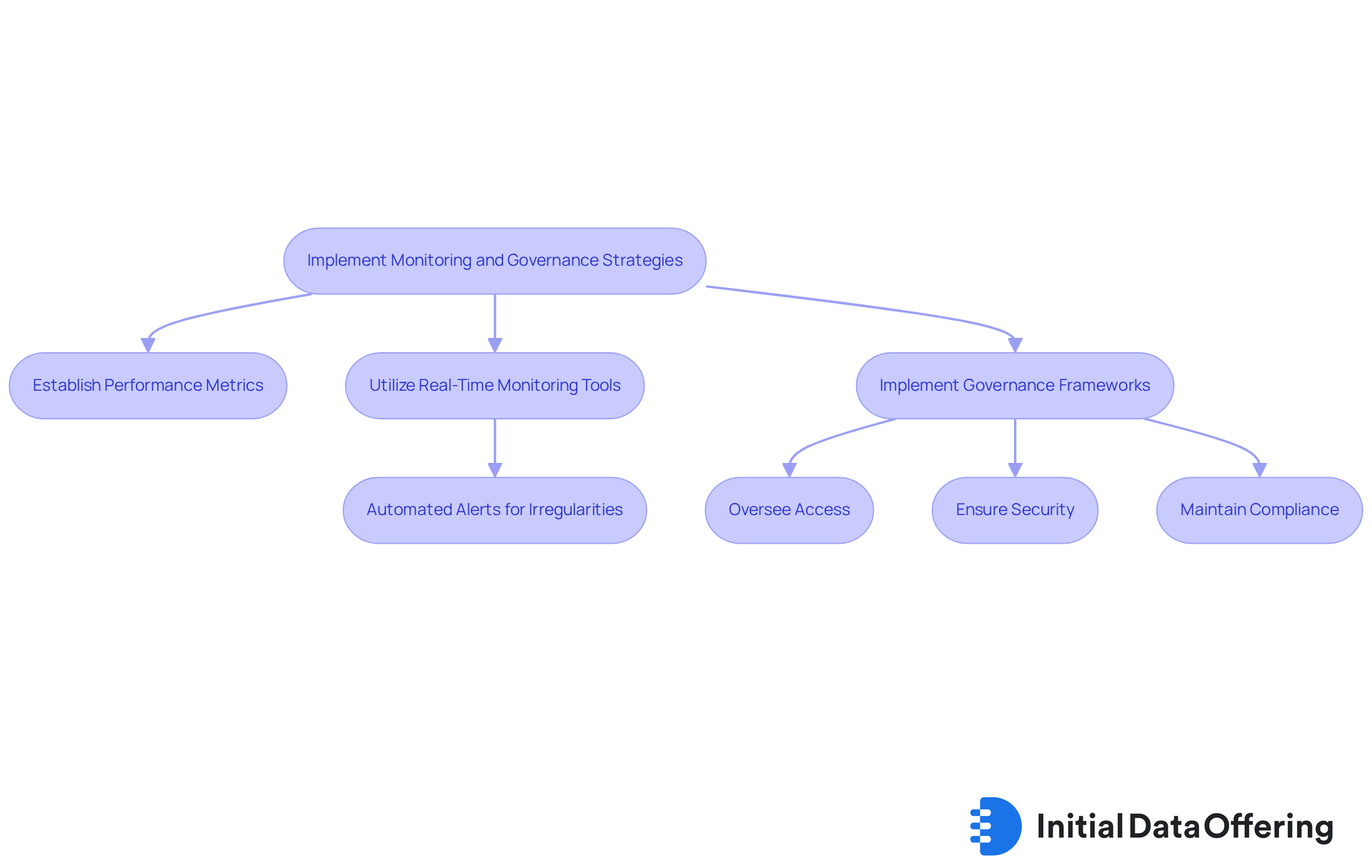

Implement Monitoring and Governance Strategies

To ensure the reliability and quality of data pipelines, organizations must implement robust monitoring and governance strategies. This involves establishing clear performance metrics, such as throughput and error rates, and using tools for real-time monitoring. Research indicates that information quality issues cost companies 31% of their revenue, underscoring the critical need for effective governance in information management.
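The two metrics named above, throughput and error rate, can be tracked with very little code. The `PipelineMetrics` class below is a hypothetical sketch; a real deployment would more likely export these values to a dedicated monitoring system.

```python
import time
from dataclasses import dataclass, field

@dataclass
class PipelineMetrics:
    """Hypothetical in-process counter for throughput and error rate."""
    processed: int = 0
    failed: int = 0
    started_at: float = field(default_factory=time.monotonic)

    def record(self, ok):
        """Count one processed record and note whether it failed."""
        self.processed += 1
        if not ok:
            self.failed += 1

    @property
    def error_rate(self):
        """Fraction of processed records that failed."""
        return self.failed / self.processed if self.processed else 0.0

    def throughput(self, now=None):
        """Records per second since start; `now` can be passed for testing."""
        elapsed = (now if now is not None else time.monotonic()) - self.started_at
        return self.processed / elapsed if elapsed > 0 else 0.0

# A fixed start time keeps the example deterministic.
metrics = PipelineMetrics(started_at=0.0)
for value in ["1", "2", "x", "4"]:
    try:
        int(value)
        metrics.record(ok=True)
    except ValueError:
        metrics.record(ok=False)

print(metrics.error_rate, metrics.throughput(now=2.0))
```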

Furthermore, governance frameworks should oversee access, security, and compliance. For instance, automated alerts can assist teams in swiftly recognizing and resolving irregularities in information flow. As noted by TDWI, 50% of teams dedicate more than 61% of their time to integration tasks, highlighting the operational burden that governance aims to alleviate.
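An automated alert can be as simple as comparing a metrics snapshot against agreed limits. In the sketch below the limit values, the snapshot keys, and the `check_thresholds` function are all illustrative assumptions; printing stands in for whatever notification channel a team actually uses.

```python
def check_thresholds(snapshot, limits):
    """Compare a metrics snapshot against limits and return alert messages."""
    alerts = []
    if snapshot["error_rate"] > limits["max_error_rate"]:
        alerts.append(
            f"error_rate {snapshot['error_rate']:.1%} exceeds "
            f"limit {limits['max_error_rate']:.1%}"
        )
    if snapshot["rows_per_second"] < limits["min_rows_per_second"]:
        alerts.append(
            f"rows_per_second {snapshot['rows_per_second']:.0f} is below "
            f"minimum {limits['min_rows_per_second']:.0f}"
        )
    return alerts

# Hypothetical limits a team might agree on for one pipeline.
LIMITS = {"max_error_rate": 0.05, "min_rows_per_second": 100.0}

snapshot = {"error_rate": 0.08, "rows_per_second": 250.0}
alerts = check_thresholds(snapshot, LIMITS)
for message in alerts:
    print("ALERT:", message)  # stand-in for email, chat, or paging integrations
```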

By emphasizing oversight and governance, organizations can enhance the resilience of their data pipelines and uphold high standards of quality. Additionally, those that embrace cloud-based data pipelines can achieve a 3.7x ROI, illustrating the potential benefits of implementing these strategies.

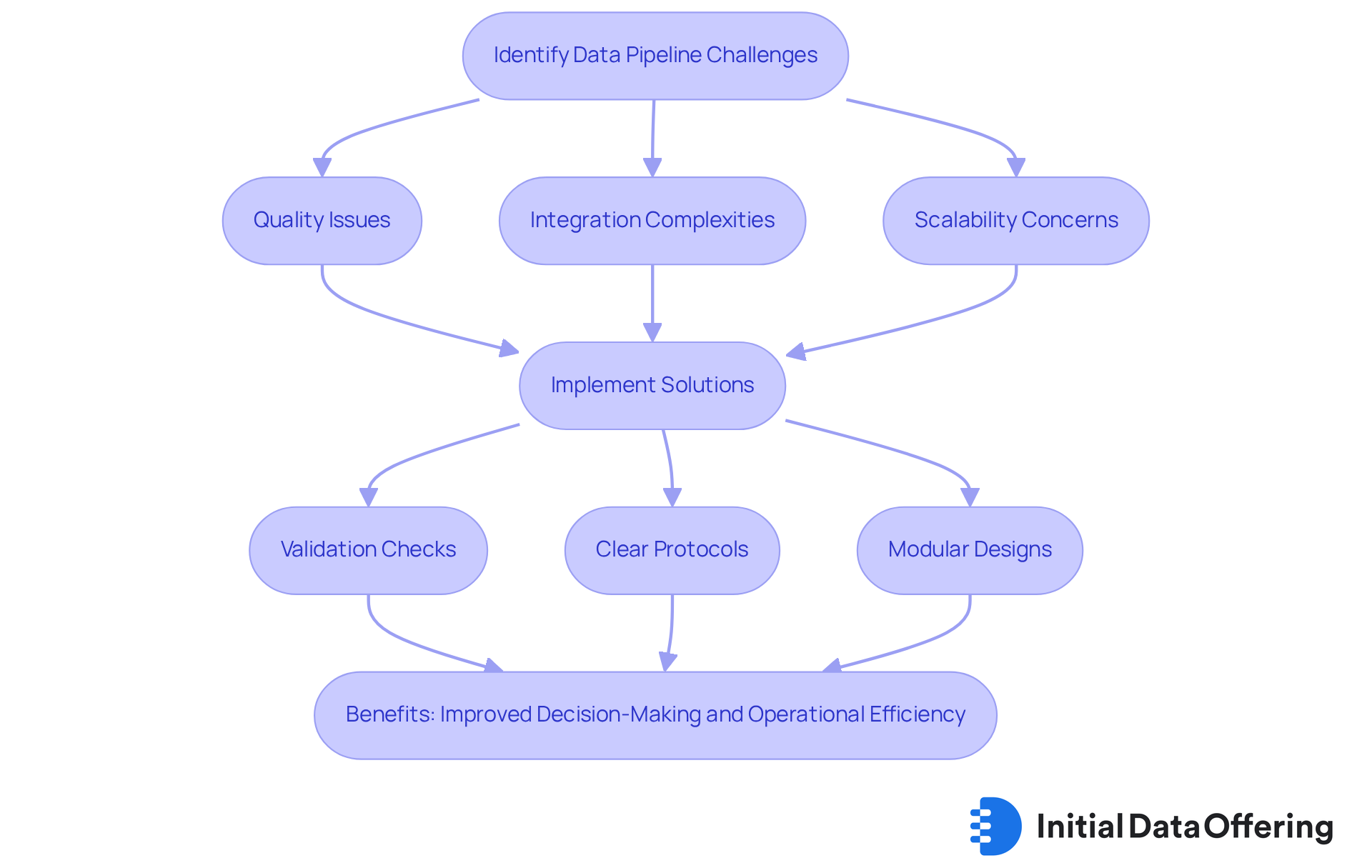

Identify and Overcome Common Data Pipeline Challenges

Frequent obstacles in data pipelines include quality issues, integration complexities, and scalability concerns. These challenges can significantly impact data integrity. For instance, schema modifications often interrupt the flow of information, leading to incomplete or flawed datasets. To address these issues, organizations should implement validation checks and establish clear protocols for managing schema changes. This proactive approach not only mitigates risks but also ensures that data remains reliable and accessible.
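A validation check of the kind described above might compare each incoming record against an expected schema, so that an upstream schema change surfaces as an explicit error instead of silently corrupting downstream data. The schema and field names here are hypothetical.

```python
# Hypothetical expected schema: field name -> required Python type.
EXPECTED_SCHEMA = {"id": int, "email": str, "amount": float}

def validate(record, schema=EXPECTED_SCHEMA):
    """Return a list of problems found in a record; an empty list means it passed."""
    problems = []
    for name, expected_type in schema.items():
        if name not in record:
            problems.append(f"missing field: {name}")
        elif not isinstance(record[name], expected_type):
            actual = type(record[name]).__name__
            problems.append(f"{name}: expected {expected_type.__name__}, got {actual}")
    # Fields that are not in the schema often signal an upstream schema change.
    for name in sorted(record.keys() - schema.keys()):
        problems.append(f"unexpected field: {name}")
    return problems

good = {"id": 1, "email": "a@example.com", "amount": 9.99}
renamed = {"id": 2, "email_address": "b@example.com", "amount": "9.99"}

print(validate(good))
print(validate(renamed))
```

Returning every problem at once, instead of stopping at the first, tends to make schema drift easier to diagnose.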

Moreover, embracing modular designs enhances flexibility and scalability. By enabling teams to adapt to evolving information needs, organizations can better respond to changes in the data landscape. How can your organization benefit from such adaptable structures? By identifying and addressing these challenges, companies can streamline their data operations and ensure the integrity of their data pipelines. Ultimately, this leads to improved decision-making and operational efficiency.
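One way to picture the modular design mentioned above is a pipeline assembled from small, independent stages that can be added, removed, or reordered. The stage functions and the `build_pipeline` helper below are assumptions made for illustration.

```python
from functools import reduce

def drop_incomplete(rows):
    """Stage: remove rows that lack a customer name."""
    return [row for row in rows if row.get("name")]

def normalize_names(rows):
    """Stage: trim whitespace and title-case names."""
    return [{**row, "name": row["name"].strip().title()} for row in rows]

def add_amount_cents(rows):
    """Stage: derive an integer cents field from the amount."""
    return [{**row, "amount_cents": round(row["amount"] * 100)} for row in rows]

def build_pipeline(*stages):
    """Compose stages into a single callable that applies them in order."""
    def run(rows):
        return reduce(lambda data, stage: stage(data), stages, rows)
    return run

pipeline = build_pipeline(drop_incomplete, normalize_names, add_amount_cents)
result = pipeline([
    {"name": " alice smith ", "amount": 12.5},
    {"name": "", "amount": 3.0},
])
print(result)
```

Because each stage takes rows and returns rows, adapting to a new requirement usually means writing one more function and adding it to the `build_pipeline` call.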

Conclusion

Understanding and optimizing data pipelines is essential for organizations striving to enhance their operational efficiency and data management strategies. By mastering the fundamentals of data pipelines, companies can streamline information flow and align their processes with business objectives, particularly in the context of AI integration. This alignment not only facilitates better data handling but also enhances the overall strategic direction of the organization.

The article highlights the significance of recognizing different types of data pipelines, such as batch and streaming systems, and the necessity of implementing robust monitoring and governance strategies. Addressing common challenges faced in data pipeline management is crucial; proactive measures must be taken to ensure data integrity and accessibility. By adopting modular designs and effective governance frameworks, organizations can navigate the complexities of data management, ultimately improving their decision-making capabilities.

Ultimately, the effective optimization of data pipelines is not just a technical requirement; it is a strategic imperative that can significantly impact an organization’s success. By embracing best practices and innovative solutions, businesses can unlock the full potential of their data. This not only drives growth but also enhances their competitive edge in an increasingly data-driven landscape. How can your organization leverage these insights to enhance its data management strategies and achieve greater operational efficiency?

Frequently Asked Questions

What are data pipelines?

Data pipelines are automated systems designed to facilitate the transfer of information from one or more sources to a destination, typically involving processes such as extraction, transformation, and loading (ETL).

What are the key elements of data pipelines?

The key elements of data pipelines include information sources, information processing, and information storage. Each element is crucial for ensuring information quality and accessibility.

How does Initial Information Offering enhance operational efficiency?

Initial Information Offering leverages AI-powered APIs to provide high-quality datasets and advanced entity resolution capabilities, which enhances operational efficiency.

What benefits do well-organized data pipelines provide?

A well-organized data pipeline enhances information flow, decreases latency, and boosts overall operational efficiency.

How can understanding data pipelines influence information management strategies?

Recognizing the fundamentals of data pipelines empowers organizations to develop more effective information strategies that align with their business objectives, particularly when integrating AI-driven solutions.