10 Essential Commands of DDL for Effective Database Management

Overview

This article covers the essential Data Definition Language (DDL) commands for database management. DDL commands such as CREATE, ALTER, and DROP define, modify, and remove the structure of a database (its tables, views, indexes, and constraints), giving users the tools to structure, modify, and maintain their databases efficiently. Used well, they improve data quality and accessibility, which in turn supports informed decision-making in a data-driven landscape.

How can these DDL commands transform your database management practices? By understanding their features and advantages, users can leverage these commands to optimize their databases, ultimately leading to better data-driven outcomes.

Introduction

Mastering the essential commands of Data Definition Language (DDL) is crucial for effective database management, particularly in the context of Initial Data Offerings (IDO). These commands enable users to create, modify, and maintain high-quality datasets, ensuring that data remains well-structured and accessible for analysis. As the landscape of data management evolves, organizations must consider how to leverage these DDL commands to enhance operational efficiency and improve decision-making processes. This exploration delves into ten key DDL commands that not only streamline dataset management but also unlock new opportunities for data-driven insights.

Initial Data Offering: Streamline Your Dataset Management with DDL Commands

DDL commands are essential tools for managing databases, especially for participants in Initial Data Offerings (IDO). They let users create, modify, and maintain high-quality data collections. By mastering them, users can ensure that their data collections are well-structured, easily accessible, and ready for analysis. This increases the value of the information offered on the platform and supports market research analysts who rely on accurate, timely data for decision-making.

Furthermore, SavvyIQ's AI-driven data framework, which includes its Recursive Data Engine, helps users get the most out of their datasets. How can you use these capabilities to improve your data management processes? By combining DDL commands with SavvyIQ's tools, you can streamline your data operations and improve the quality of the insights derived from your data. This combination of structured data management and advanced analytics is crucial for staying competitive in today's data-driven landscape.

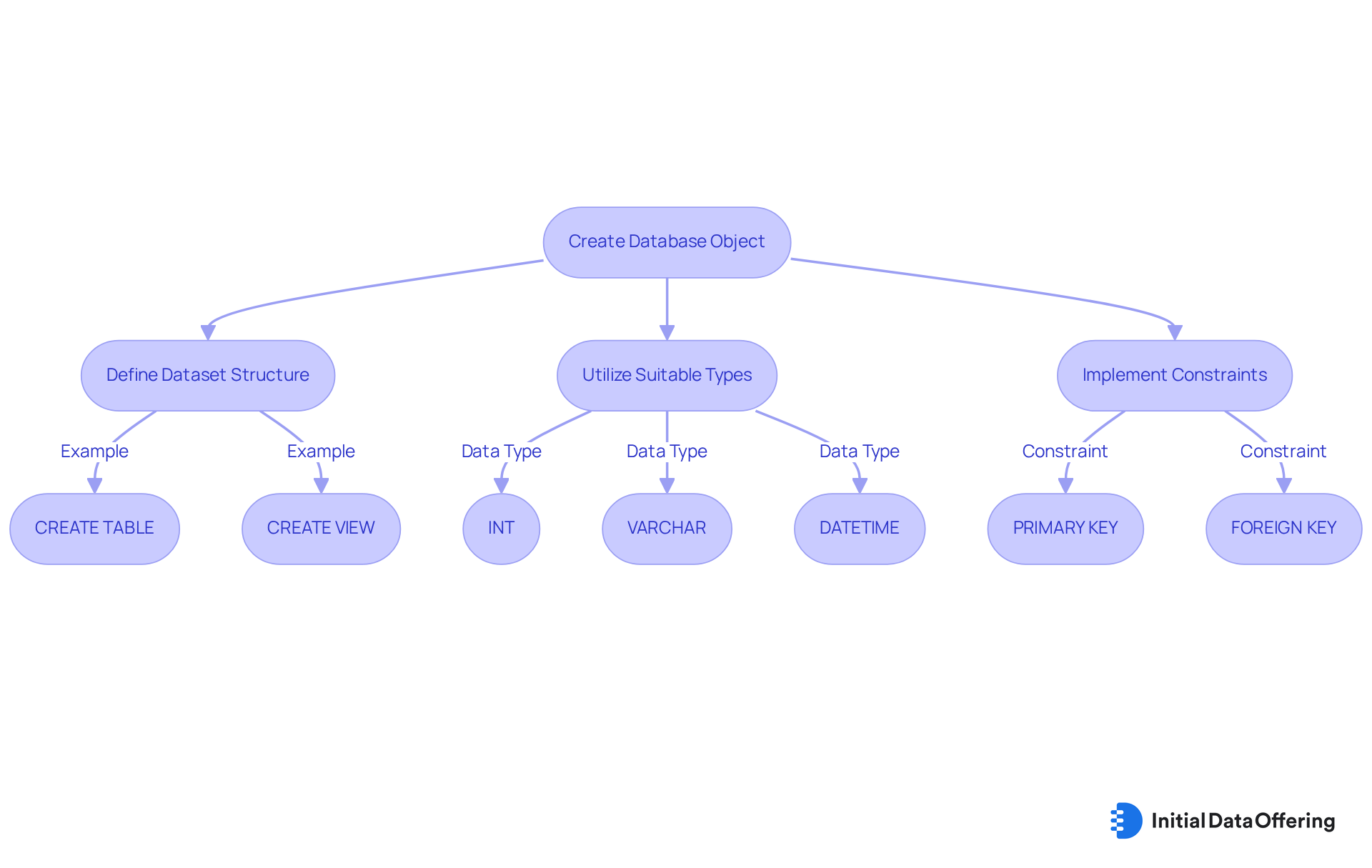

CREATE Command: Establish New Database Objects Efficiently

The CREATE command is fundamental for establishing new database objects, including tables, views, and indexes. It is a critical tool for anyone introducing a new dataset on the IDO platform, because it defines the dataset's structure: its data types, constraints, and relationships. A well-defined structure keeps the dataset consistent and makes it efficient to query and analyze.

For example, a user might execute an instruction like CREATE TABLE TikTokSentiment (id INT PRIMARY KEY, sentiment VARCHAR(255), timestamp DATETIME); to create a structured table for TikTok Consumer Sentiment Data. The primary key keeps each record uniquely identifiable, and the explicit column types make the dataset easier to use in analytics.
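The statement above uses generic SQL. As a runnable sketch, the snippet below assumes Python's built-in sqlite3 module and an in-memory SQLite database (other engines differ; PostgreSQL, for example, has no DATETIME type). It creates the table plus an index and a view; the names idx_sentiment_timestamp and PositiveSentiment are hypothetical.

```python
import sqlite3


def create_sentiment_schema(conn: sqlite3.Connection) -> None:
    """Create a table, an index, and a view (SQLite dialect)."""
    conn.executescript(
        """
        -- Table: column names, types, and constraints define the structure.
        -- In SQLite, INTEGER PRIMARY KEY makes id an alias for the rowid.
        CREATE TABLE TikTokSentiment (
            id        INTEGER PRIMARY KEY,
            sentiment VARCHAR(255) NOT NULL,
            timestamp DATETIME
        );

        -- Index (hypothetical name): speeds up filtering and sorting by time.
        CREATE INDEX idx_sentiment_timestamp ON TikTokSentiment (timestamp);

        -- View (hypothetical name): a stored query exposing positive posts.
        CREATE VIEW PositiveSentiment AS
            SELECT id, timestamp FROM TikTokSentiment
            WHERE sentiment = 'positive';
        """
    )


def list_objects(conn: sqlite3.Connection) -> list:
    """Return (type, name) pairs for user-defined schema objects."""
    return conn.execute(
        "SELECT type, name FROM sqlite_master "
        "WHERE name NOT LIKE 'sqlite_%' ORDER BY type, name"
    ).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_sentiment_schema(conn)
    for obj_type, name in list_objects(conn):
        print(obj_type, name)
```

In a production engine the same three statements would typically live in a versioned migration script rather than application code.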

Database administrators emphasize the importance of the CREATE instruction, asserting that it lays the foundation for effective information management. As one specialist noted, 'A clearly defined dataset framework is crucial for efficient information retrieval and analysis.'

Good practice when using CREATE includes defining the dataset's structure explicitly, choosing suitable data types, and adding constraints that uphold data quality. Current trends point toward automation and cloud-based tooling for creating database objects, which streamline the process and improve accessibility. With the global dataset marketplace platform sector anticipated to grow at a CAGR of 25.2% from 2025 to 2030, and with North America leading that market, the ability to create and manage datasets effectively will matter more and more to anyone who relies on data for decision-making.

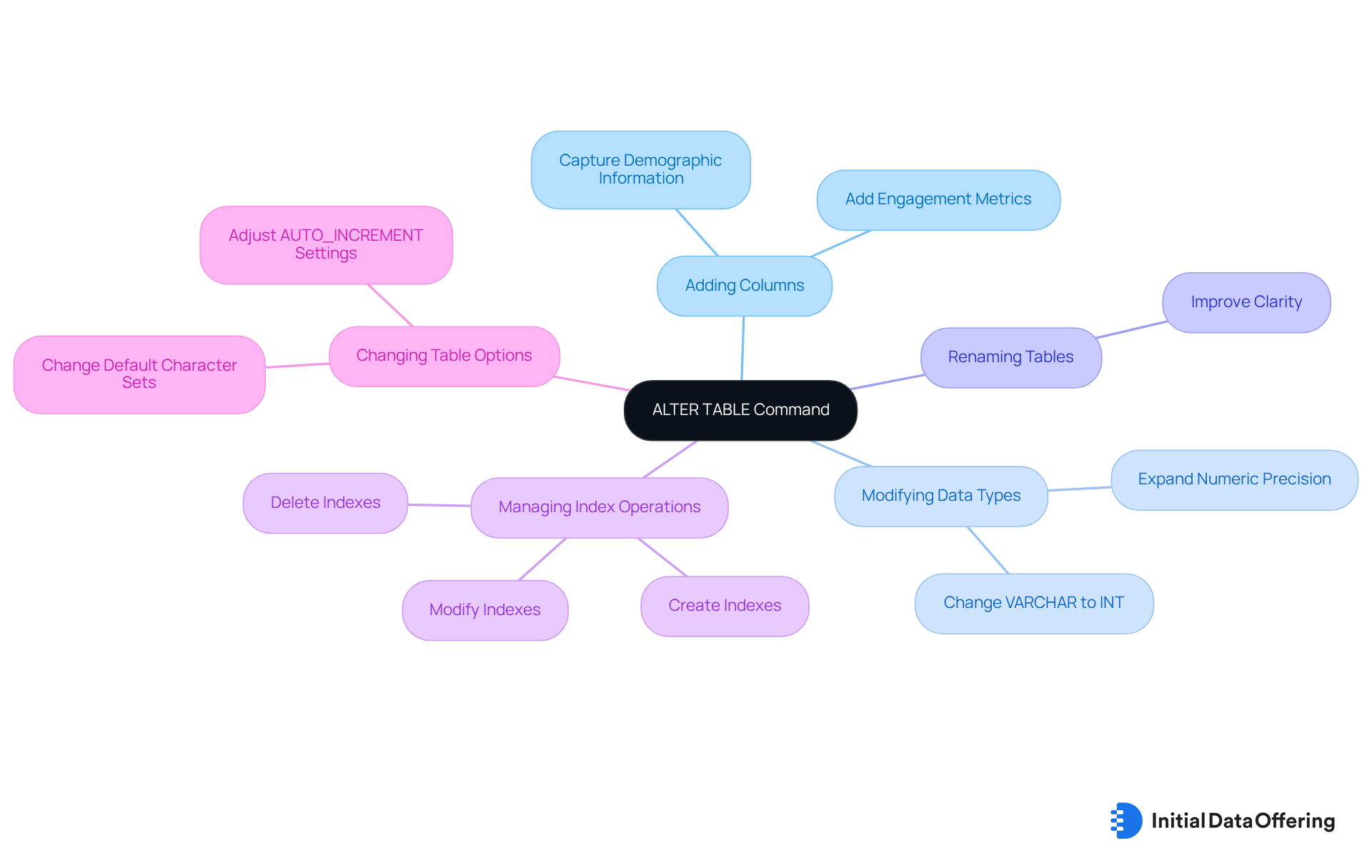

ALTER Command: Modify Database Structures as Needed

The ALTER TABLE command modifies existing table structures, letting users adapt to changing information needs. It can add new columns, change data types, and rename tables or columns, keeping datasets relevant and comprehensive. For instance, if a consumer sentiment dataset needs new metrics, ALTER TABLE can extend its structure without recreating the table or discarding existing rows. This adaptability is crucial in 2025, as organizations increasingly rely on accurate and up-to-date information to inform their strategies.

Common applications of the ALTER TABLE command include:

- Adding new columns to capture emerging data points, such as demographic information or engagement metrics.

- Modifying existing column types to accommodate changes in precision, such as increasing a numeric column's precision to represent financial figures more accurately.

- Renaming columns to improve clarity and relevance, ensuring that the dataset aligns with current analytical needs.

- Managing indexes, including creating, dropping, or modifying them (for example, with MySQL's ALTER TABLE ... ADD INDEX), which can speed up queries.

- Changing table options, such as MySQL's AUTO_INCREMENT starting value and default character set, to match evolving application requirements.
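A sketch of the column and table changes from the list, again assuming Python's sqlite3 module. SQLite supports ADD COLUMN, RENAME COLUMN (SQLite 3.25 or later), and RENAME TO, but it cannot change a column's type in place; MySQL uses ALTER TABLE ... MODIFY COLUMN and PostgreSQL uses ALTER TABLE ... ALTER COLUMN ... TYPE for that. The engagement column and the new names are hypothetical.

```python
import sqlite3


def evolve_sentiment_table(conn: sqlite3.Connection) -> None:
    """Apply typical ALTER TABLE changes using SQLite syntax."""
    # Add a column for a new metric; existing rows receive the default value.
    conn.execute(
        "ALTER TABLE TikTokSentiment ADD COLUMN engagement INTEGER DEFAULT 0"
    )
    # Rename a column for clarity (requires SQLite 3.25 or later).
    conn.execute(
        "ALTER TABLE TikTokSentiment RENAME COLUMN sentiment TO sentiment_label"
    )
    # Rename the table itself.
    conn.execute("ALTER TABLE TikTokSentiment RENAME TO tiktok_sentiment")


def column_names(conn: sqlite3.Connection, table: str) -> list:
    """List a table's columns in order (table name assumed trusted)."""
    return [row[1] for row in conn.execute(f"PRAGMA table_info({table})")]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE TikTokSentiment "
        "(id INTEGER PRIMARY KEY, sentiment TEXT, timestamp DATETIME)"
    )
    conn.execute("INSERT INTO TikTokSentiment (sentiment) VALUES ('positive')")
    evolve_sentiment_table(conn)
    print(column_names(conn, "tiktok_sentiment"))
    print(conn.execute("SELECT * FROM tiktok_sentiment").fetchall())
```

The existing row keeps its data and simply gains engagement = 0, which is the point of altering rather than recreating the table.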

Note that some ALTER TABLE operations can be slow on large tables. Depending on the database engine and the change, the engine may rebuild the table by creating a copy, applying the change, and swapping the copy in (MySQL's COPY algorithm works this way), while other changes are applied in place or as metadata-only updates. Used effectively, ALTER TABLE lets organizations maintain robust data collections that support informed decision-making and strategic planning. Collections available on IDO, such as TikTok Consumer Sentiment Data and Corporate Carbon Footprint ESG Data, illustrate how such modifications keep data accessible and visible.

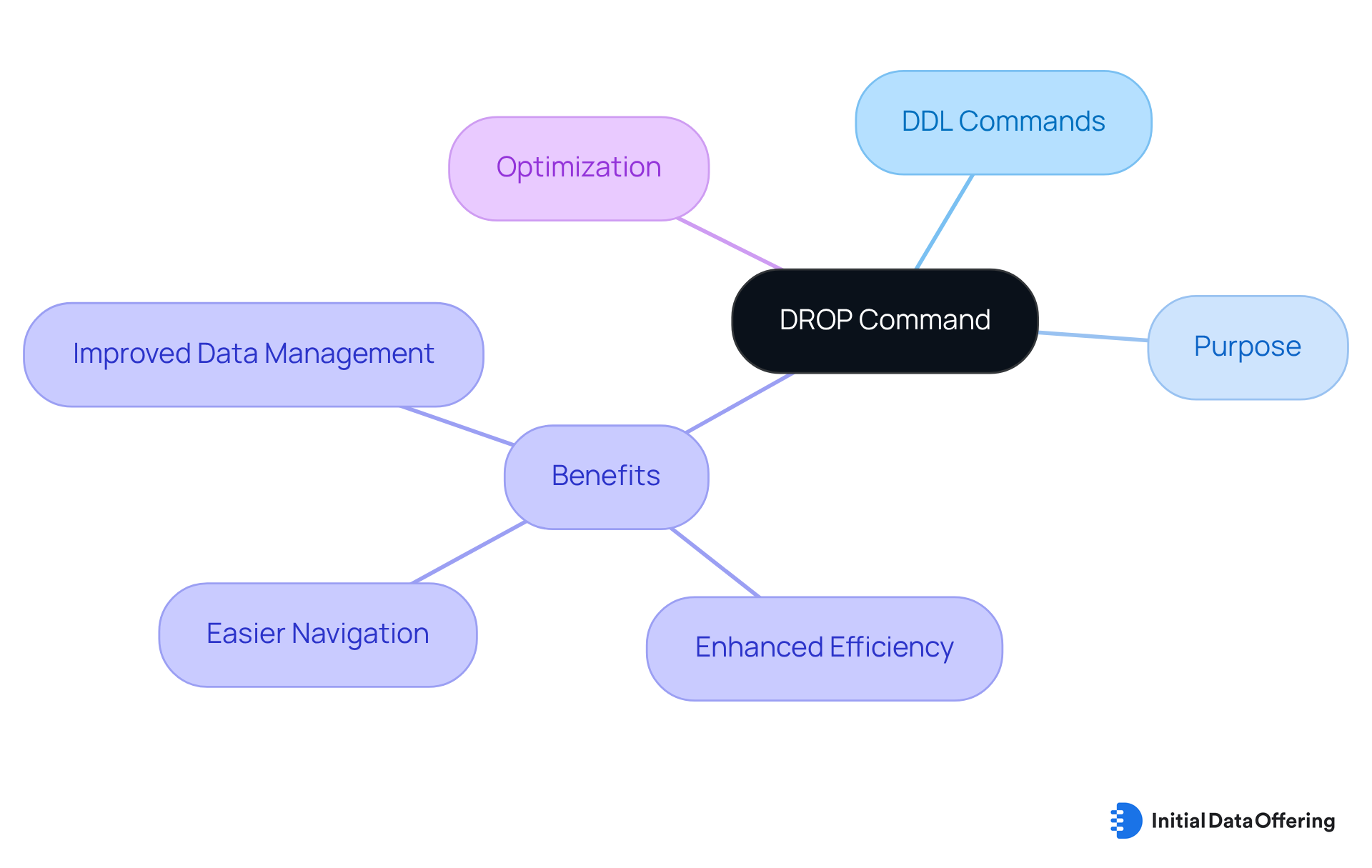

DROP Command: Remove Unnecessary Database Objects Safely

The DROP command removes database objects, such as tables, views, and indexes, that are no longer required. Dropping outdated data collections or tables reduces clutter, keeping the database efficient and easier to navigate. Because DROP deletes both an object's definition and its data, use it deliberately: guard it with IF EXISTS where supported, and keep a backup, since a dropped table generally cannot be recovered without one. Have you considered how a leaner database could improve performance in your daily operations?
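One way to make a DROP safer is to guard it with IF EXISTS and run it inside a transaction, so it can still be rolled back before commit. The sketch below assumes Python's sqlite3 module opened in autocommit mode (isolation_level=None) so that BEGIN, COMMIT, and ROLLBACK are issued explicitly. It relies on transactional DDL, which SQLite and PostgreSQL support; MySQL, by contrast, implicitly commits DDL statements, so a DROP there cannot be rolled back. The table name old_sentiment is hypothetical.

```python
import sqlite3


def quote_ident(name: str) -> str:
    """Quote an identifier, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def drop_table_safely(conn: sqlite3.Connection, name: str, commit: bool) -> None:
    """Drop a table inside an explicit transaction.

    Assumes conn was opened with isolation_level=None (autocommit), so this
    function controls BEGIN/COMMIT/ROLLBACK itself.
    """
    conn.execute("BEGIN")
    try:
        # IF EXISTS turns "no such table" into a no-op instead of an error.
        conn.execute(f"DROP TABLE IF EXISTS {quote_ident(name)}")
    except sqlite3.Error:
        conn.execute("ROLLBACK")
        raise
    # Commit to make the drop permanent, or roll back to undo it.
    conn.execute("COMMIT" if commit else "ROLLBACK")


def table_exists(conn: sqlite3.Connection, name: str) -> bool:
    row = conn.execute(
        "SELECT 1 FROM sqlite_master WHERE type = 'table' AND name = ?", (name,)
    ).fetchone()
    return row is not None


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:", isolation_level=None)
    conn.execute("CREATE TABLE old_sentiment (id INTEGER PRIMARY KEY)")
    drop_table_safely(conn, "old_sentiment", commit=False)
    print(table_exists(conn, "old_sentiment"))  # still there after rollback
    drop_table_safely(conn, "old_sentiment", commit=True)
    print(table_exists(conn, "old_sentiment"))  # gone after commit
```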

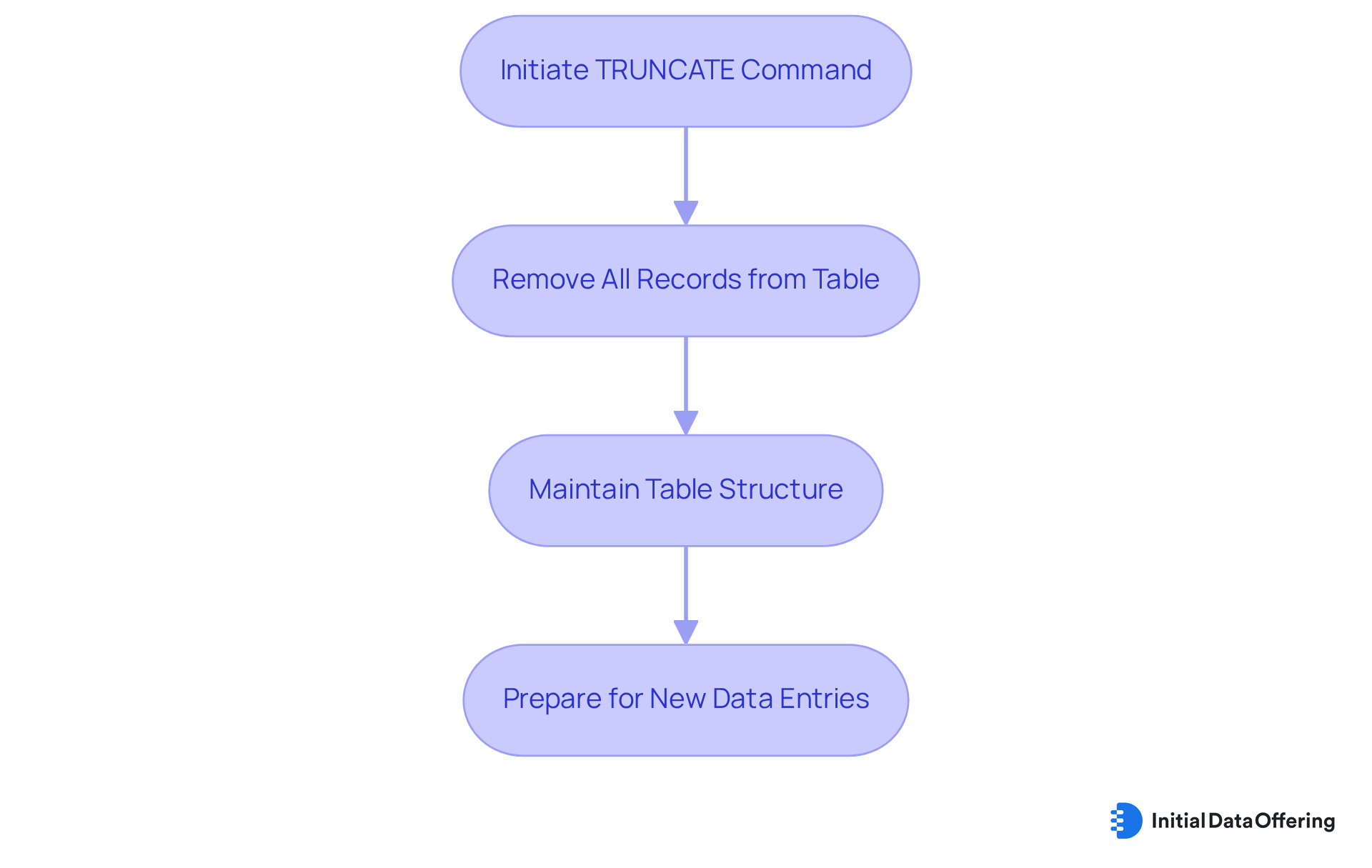

TRUNCATE Command: Efficiently Clear Table Data

The TRUNCATE command quickly removes all rows from a table while keeping the table's structure (its columns, constraints, and indexes) intact. This is particularly useful for datasets that are reloaded frequently, such as consumer sentiment data.

With TRUNCATE, users can clear outdated data and prepare the table for new entries in a single statement. On large tables it is typically much faster than DELETE, because it deallocates the table's data rather than removing and logging rows one at a time.
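In MySQL, PostgreSQL, SQL Server, and Oracle the statement is TRUNCATE TABLE table_name. SQLite, used here through Python's sqlite3 module for a runnable sketch, has no TRUNCATE; its equivalent is a DELETE with no WHERE clause, which SQLite optimizes to clear the table without visiting each row. Engines also differ on details such as whether identity counters are reset. The table and column names are hypothetical.

```python
import sqlite3


def clear_table(conn: sqlite3.Connection, name: str) -> None:
    """Remove every row from a table but keep its definition.

    SQLite has no TRUNCATE TABLE; an unqualified DELETE is its equivalent.
    On other engines this would be: TRUNCATE TABLE <name>
    """
    quoted = '"' + name.replace('"', '""') + '"'
    with conn:  # commit on success, roll back on error
        conn.execute(f"DELETE FROM {quoted}")  # no WHERE clause: all rows


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sentiment (id INTEGER PRIMARY KEY, label TEXT)")
    conn.executemany(
        "INSERT INTO sentiment (label) VALUES (?)", [("positive",), ("negative",)]
    )
    conn.commit()
    clear_table(conn, "sentiment")
    print(conn.execute("SELECT COUNT(*) FROM sentiment").fetchone()[0])
```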

How could this capability streamline your data management processes? Using TRUNCATE to refresh tables keeps a dataset current, which in turn supports informed decision-making.

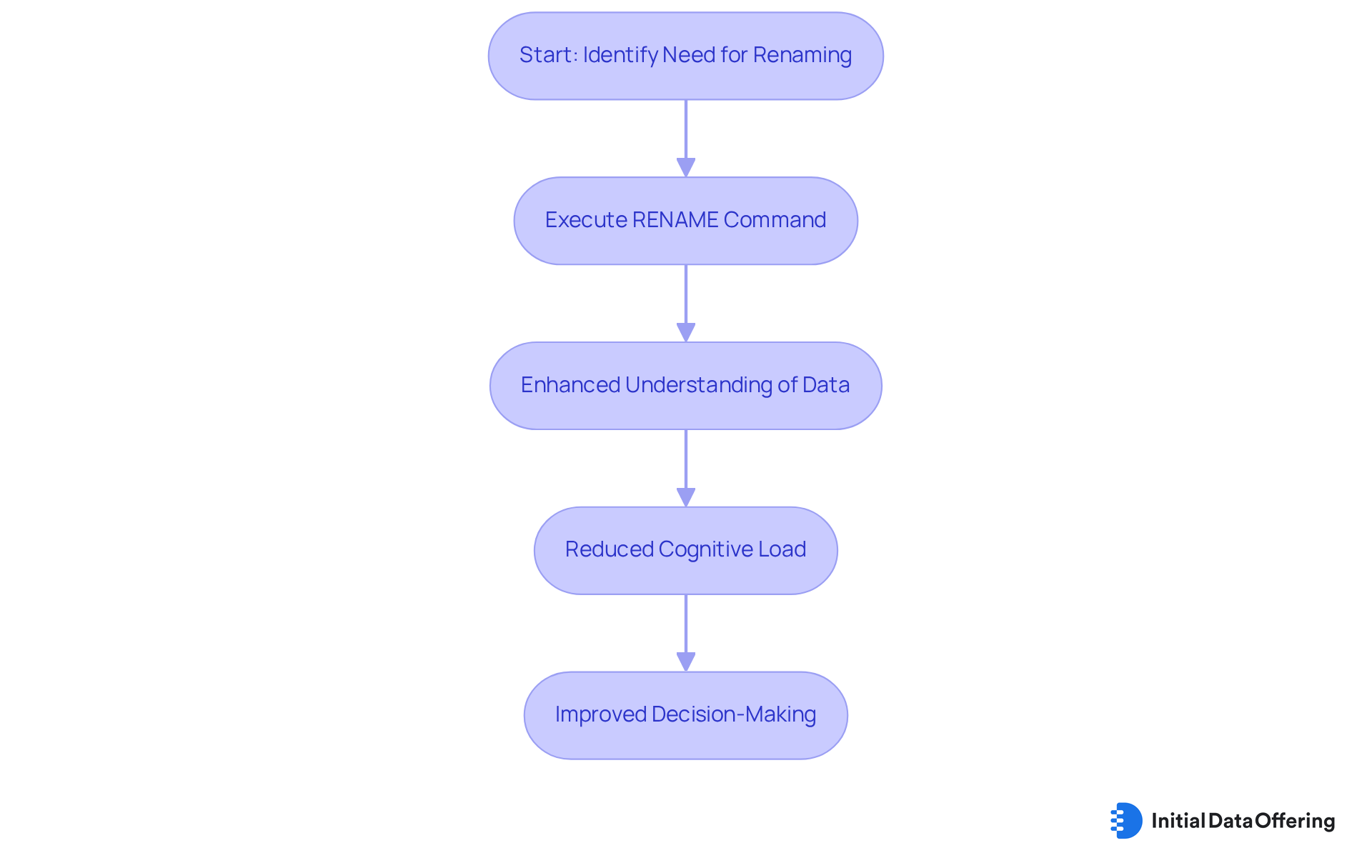

RENAME Command: Update Object Names for Better Clarity

The RENAME command serves as a powerful tool that enables individuals to modify the names of database objects, including tables and columns. This functionality is crucial when original names fail to accurately represent the evolving information they encompass. For instance, as datasets expand to incorporate new metrics, renaming tables can significantly enhance understanding of their contents, thereby improving overall usability.

Clear naming conventions are increasingly important in information management. Well-defined names make a schema easier to navigate and reduce the cognitive load on users, leading to more efficient analysis, and the clarity of dataset names can directly affect how well data supports decisions. One frequently cited estimate holds that analysts spend 60% to 80% of their time searching for data, which underscores the need for clear naming conventions.

Looking ahead to 2025, the RENAME command is expected to play an important role in keeping datasets usable. By adopting clear, descriptive naming practices, organizations can ensure that their datasets remain accessible and meaningful. For example, a dataset originally titled 'SalesData' might be renamed to 'Quarterly_Sales_Analysis_2025' to reflect its focus and timeframe, making it immediately clear what insights it offers. (Placing the year at the end avoids an identifier that begins with a digit, which most SQL dialects accept only when quoted.) This practice improves usability and fosters a culture of data literacy within organizations, empowering people to work with information confidently and effectively. As Piyanka Jain highlights, data literacy is essential as data becomes the new currency in business.
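Renaming syntax differs across engines: MySQL offers RENAME TABLE old TO new, PostgreSQL and SQLite use ALTER TABLE old RENAME TO new, and SQL Server uses the sp_rename stored procedure. The runnable sketch below applies the SalesData example with Python's sqlite3 module; it uses the name Quarterly_Sales_Analysis_2025 because an unquoted identifier that begins with a digit is rejected by most SQL dialects. The helper names are hypothetical.

```python
import sqlite3


def quote_ident(name: str) -> str:
    """Quote an identifier so unusual names are handled safely."""
    return '"' + name.replace('"', '""') + '"'


def rename_table(conn: sqlite3.Connection, old: str, new: str) -> None:
    """Rename a table using SQLite's ALTER TABLE ... RENAME TO syntax."""
    conn.execute(f"ALTER TABLE {quote_ident(old)} RENAME TO {quote_ident(new)}")


def table_names(conn: sqlite3.Connection) -> list:
    """Return the names of all tables, sorted alphabetically."""
    return [
        row[0]
        for row in conn.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
        )
    ]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE SalesData (id INTEGER PRIMARY KEY, amount REAL)")
    rename_table(conn, "SalesData", "Quarterly_Sales_Analysis_2025")
    print(table_names(conn))
```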

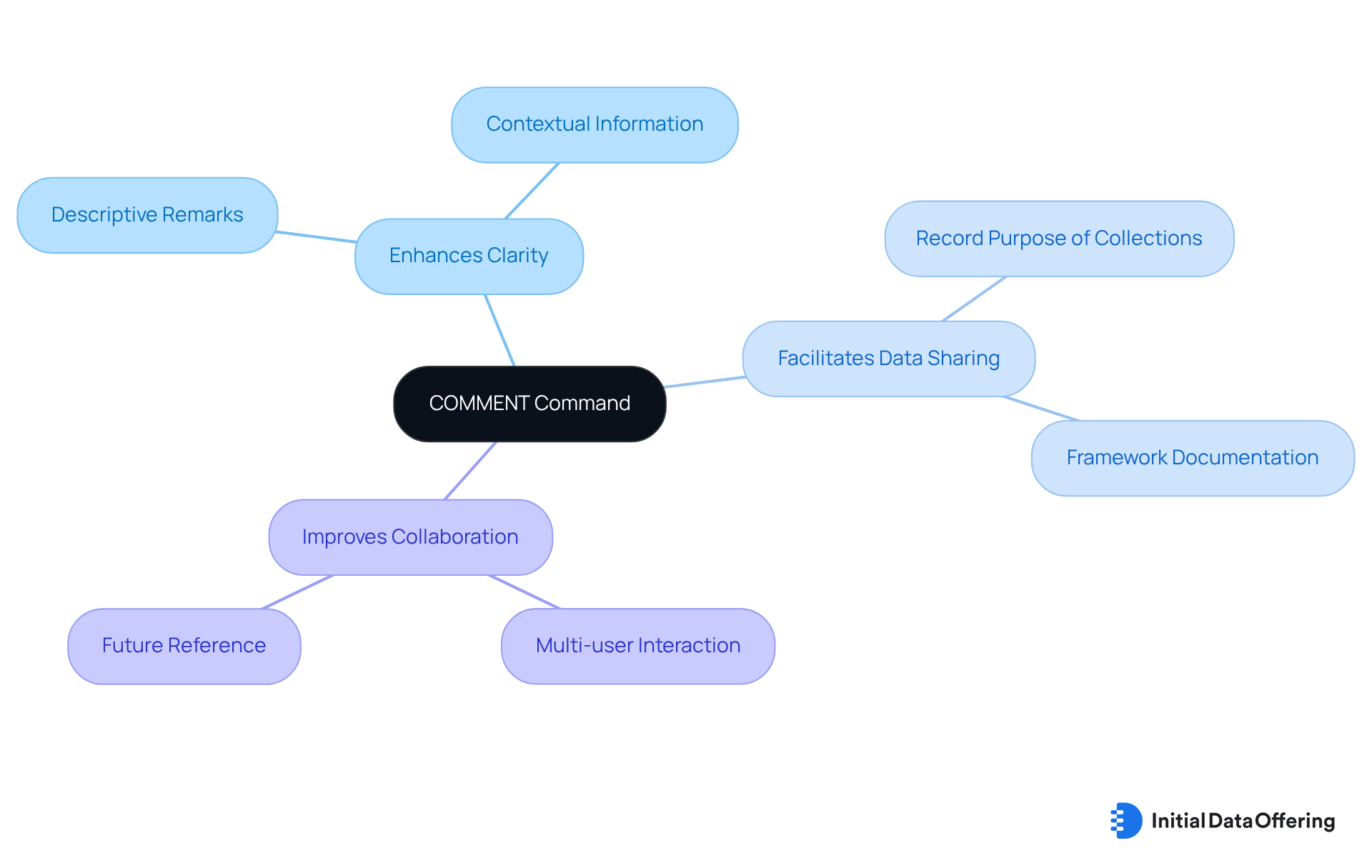

COMMENT Command: Enhance Database Documentation

The COMMENT command lets users attach descriptive remarks to database objects such as tables and columns. This is especially valuable in collaborative environments like IDO, where multiple users work with the same data: comments record the purpose and structure of a collection for future reference, which makes data easier to share and understand. Support varies by engine: PostgreSQL and Oracle provide COMMENT ON statements, while MySQL uses a COMMENT clause inside CREATE TABLE or ALTER TABLE.
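PostgreSQL attaches documentation with COMMENT ON TABLE and COMMENT ON COLUMN statements. The sketch below only builds such PostgreSQL-style statements as strings (it does not connect to a database), doubling embedded quotes as the SQL standard requires. The table, column, and comment text are hypothetical, and in real code your database driver's own quoting helpers are preferable.

```python
def quote_ident(name: str) -> str:
    """Quote an SQL identifier, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def quote_literal(text: str) -> str:
    """Quote an SQL string literal, doubling any embedded single quotes."""
    return "'" + text.replace("'", "''") + "'"


def comment_on_table(table: str, text: str) -> str:
    """Build a PostgreSQL-style COMMENT ON TABLE statement."""
    return f"COMMENT ON TABLE {quote_ident(table)} IS {quote_literal(text)}"


def comment_on_column(table: str, column: str, text: str) -> str:
    """Build a PostgreSQL-style COMMENT ON COLUMN statement."""
    target = f"{quote_ident(table)}.{quote_ident(column)}"
    return f"COMMENT ON COLUMN {target} IS {quote_literal(text)}"


if __name__ == "__main__":
    print(comment_on_table("tiktok_sentiment", "Daily TikTok posts scored by sentiment"))
    print(comment_on_column(
        "tiktok_sentiment", "sentiment_label",
        "Model's label: positive, neutral, or negative",
    ))
```

Quoted identifiers are case-sensitive in PostgreSQL, so the sketch assumes lowercase table and column names.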

How might the use of the COMMENT command improve your team's communication and understanding of shared data?

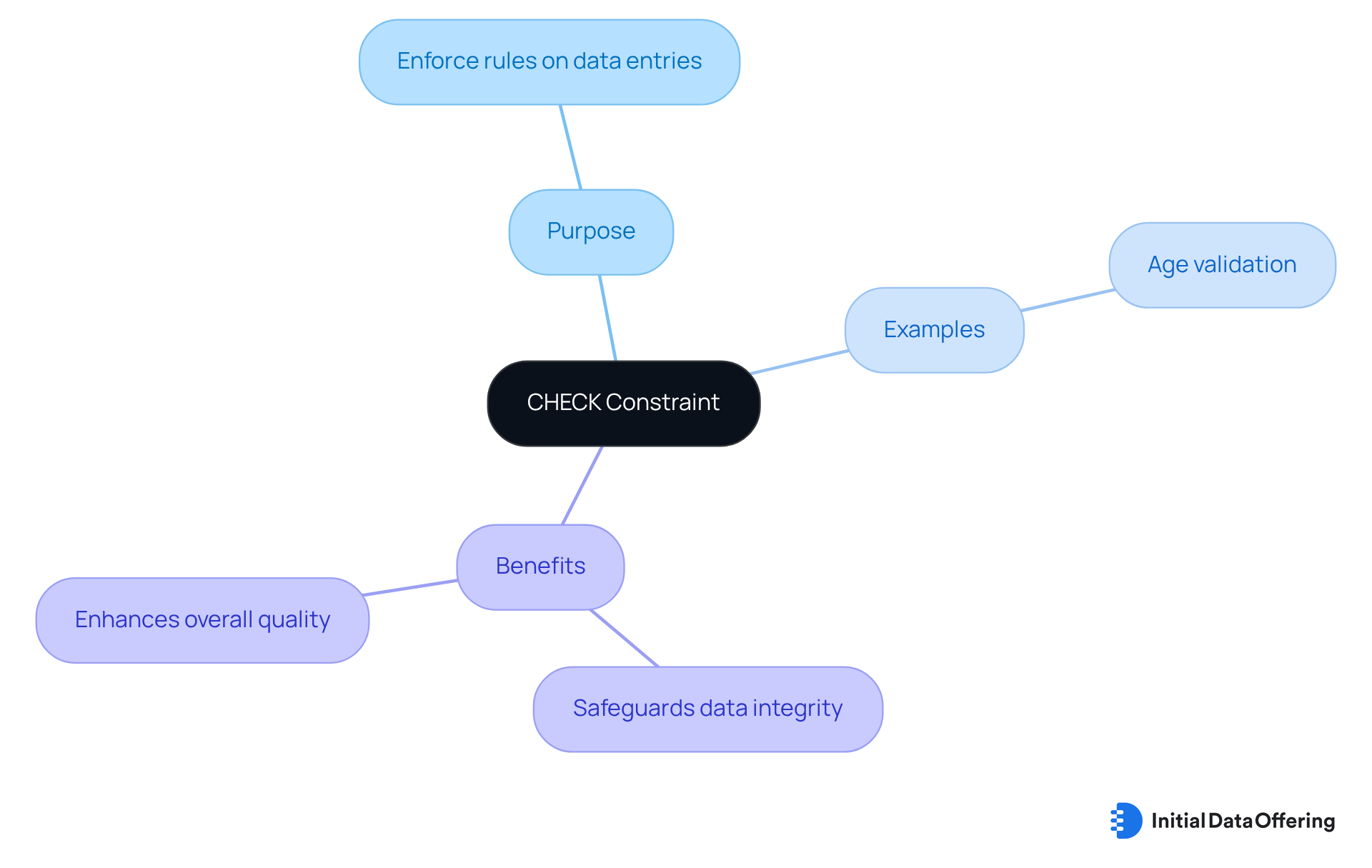

CHECK Constraint: Enforce Data Integrity Rules

The CHECK constraint enforces a rule that every value in a column (or every row) must satisfy. For instance, in a dataset that includes ages, a CHECK constraint can reject any entry outside a valid range. Because invalid values are rejected at insert or update time, the constraint protects data integrity and improves overall data quality, so analysts can draw dependable insights from the collection.
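A runnable sketch of the age example, assuming Python's sqlite3 module; the table name and the upper bound of 120 are hypothetical. SQLite enforces CHECK constraints and reports violations as sqlite3.IntegrityError. Enforcement has not always been universal: MySQL, for example, parsed but ignored CHECK constraints before version 8.0.16.

```python
import sqlite3

RESPONDENTS_SCHEMA = """
CREATE TABLE respondents (
    id  INTEGER PRIMARY KEY,
    -- Rule enforced by the database on every INSERT and UPDATE.
    age INTEGER NOT NULL CHECK (age BETWEEN 0 AND 120)
)
"""


def try_insert_age(conn: sqlite3.Connection, age: int) -> bool:
    """Insert one row; return True if accepted, False if a constraint rejected it."""
    try:
        with conn:  # commit on success, roll back on error
            conn.execute("INSERT INTO respondents (age) VALUES (?)", (age,))
        return True
    except sqlite3.IntegrityError:
        return False


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(RESPONDENTS_SCHEMA)
    for age in (34, -5, 200):
        print(age, try_insert_age(conn, age))
```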

How might these practices influence your approach to data management?

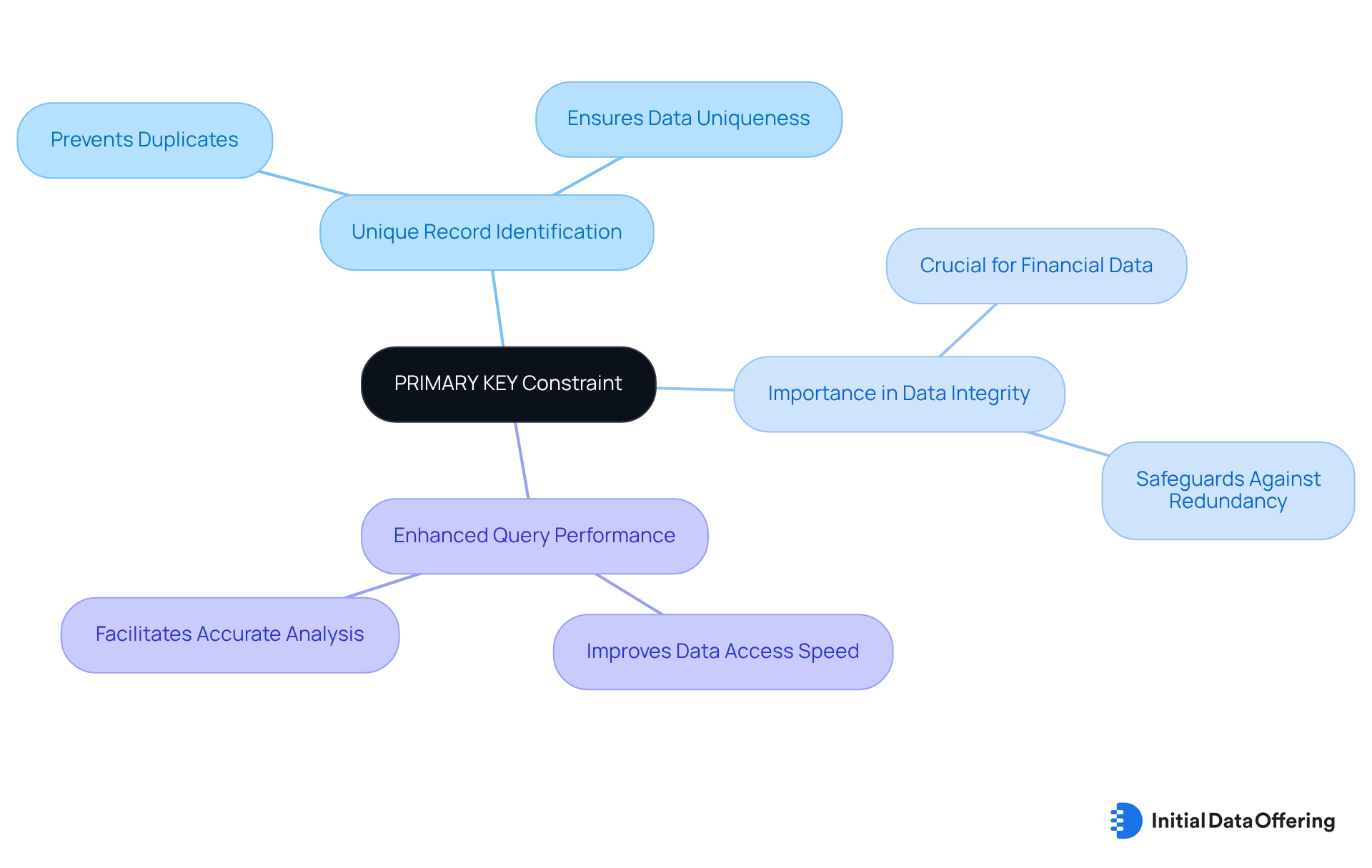

PRIMARY KEY Constraint: Ensure Unique Record Identification

The PRIMARY KEY constraint guarantees that every row in a table has a unique, non-null identifier, which prevents duplicate entries.

Why does this matter? Where records must be identified precisely, as in financial data analysis, the integrity of the data collection is paramount. A primary key protects the data against duplicate rows, and because most database engines back a primary key with an index, lookups by key are also faster.

This means that data can be accessed and analyzed with greater accuracy, leading to more reliable insights and decision-making. In summary, implementing a primary key is essential for maintaining data integrity and optimizing query performance.
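A runnable sketch assuming Python's sqlite3 module; the accounts table and the ID format are hypothetical. The key column is declared NOT NULL explicitly because, for historical reasons, SQLite allows NULLs in most non-INTEGER primary key columns unless told otherwise.

```python
import sqlite3

ACCOUNTS_SCHEMA = """
CREATE TABLE accounts (
    -- NOT NULL is explicit: SQLite does not imply it for TEXT primary keys.
    account_id TEXT NOT NULL PRIMARY KEY,
    owner      TEXT
)
"""


def insert_account(conn: sqlite3.Connection, account_id: str, owner: str) -> None:
    """Insert an account; raises sqlite3.IntegrityError if the key already exists."""
    with conn:
        conn.execute(
            "INSERT INTO accounts (account_id, owner) VALUES (?, ?)",
            (account_id, owner),
        )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(ACCOUNTS_SCHEMA)
    insert_account(conn, "ACC-001", "Ada")
    try:
        insert_account(conn, "ACC-001", "Grace")  # same key: rejected
    except sqlite3.IntegrityError as exc:
        print("duplicate rejected:", exc)
```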

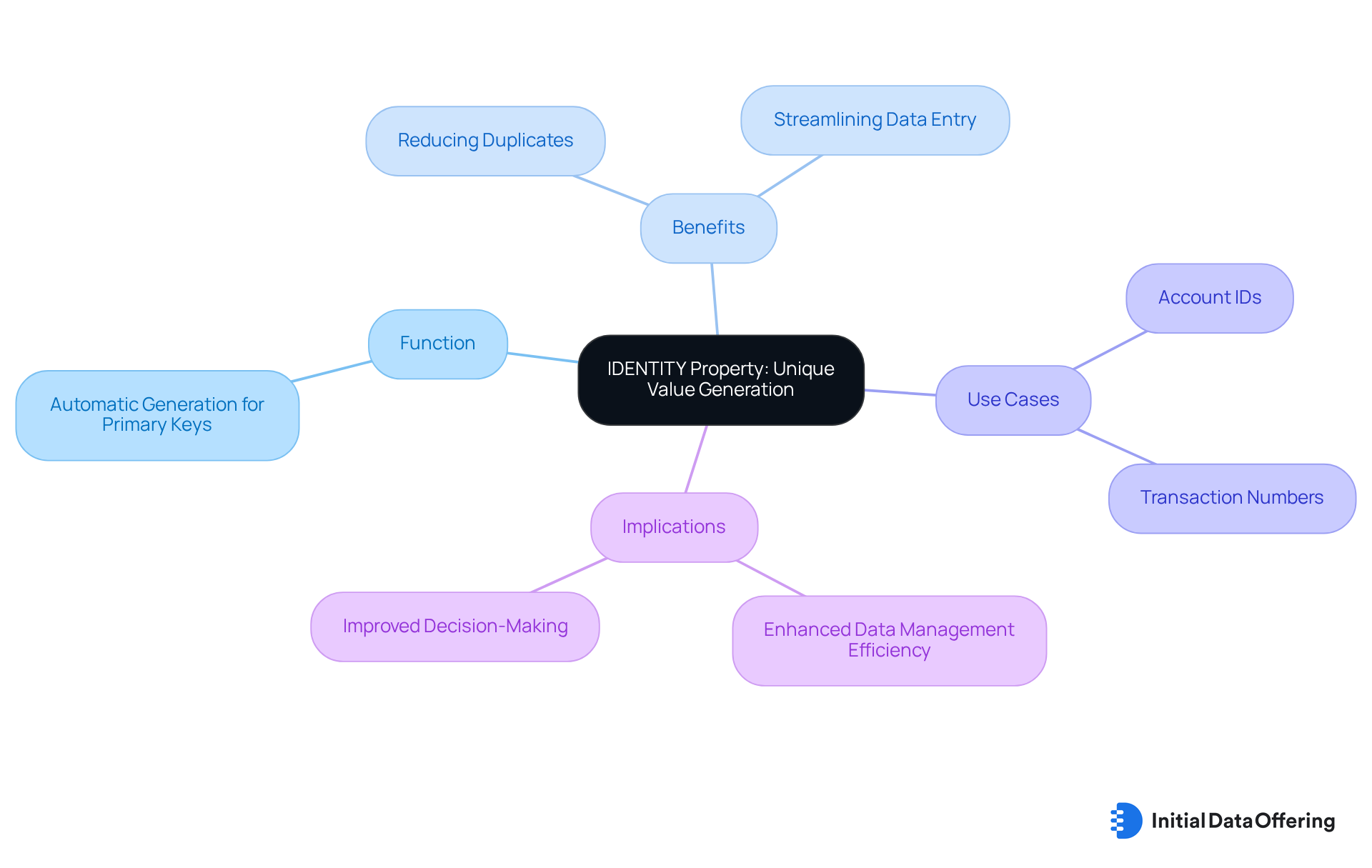

IDENTITY Property: Automatically Generate Unique Values

The IDENTITY property (SQL Server syntax; standard SQL uses GENERATED ... AS IDENTITY, and MySQL uses AUTO_INCREMENT) automatically generates a unique value for a column each time a row is inserted, which makes it a natural fit for primary keys. It is essential for datasets that need distinctive identifiers, such as account IDs or transaction numbers. Because the database assigns the values, users can simplify data entry and greatly reduce the risk of duplicate identifiers.
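The syntax depends on the engine: SQL Server writes txn_id INT IDENTITY(1,1), standard SQL (supported by PostgreSQL 10+ and Oracle 12c+) writes GENERATED ALWAYS AS IDENTITY, MySQL uses AUTO_INCREMENT, and SQLite uses INTEGER PRIMARY KEY, optionally with AUTOINCREMENT. The runnable sketch below takes the SQLite route through Python's sqlite3 module; the transactions table is hypothetical.

```python
import sqlite3

TRANSACTIONS_SCHEMA = """
CREATE TABLE transactions (
    -- SQLite's counterpart to an IDENTITY column: the engine assigns txn_id.
    -- AUTOINCREMENT also prevents reuse of ids from deleted rows.
    txn_id INTEGER PRIMARY KEY AUTOINCREMENT,
    amount REAL NOT NULL
)
"""


def record_transaction(conn: sqlite3.Connection, amount: float) -> int:
    """Insert a row without supplying txn_id and return the generated id."""
    with conn:
        cur = conn.execute("INSERT INTO transactions (amount) VALUES (?)", (amount,))
    return cur.lastrowid


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(TRANSACTIONS_SCHEMA)
    for amount in (9.99, 25.00, 3.50):
        print(record_transaction(conn, amount))
```

The application never chooses an ID itself, so two inserts cannot accidentally reuse the same value.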

Have you considered how this could enhance the efficiency of your data management tasks? Implementing this property can lead to more accurate and reliable datasets, ultimately supporting better decision-making.

Conclusion

Mastering the essential commands of Data Definition Language (DDL) is crucial for effective database management, particularly in the context of Initial Data Offerings (IDOs). These commands empower users to create, modify, and maintain well-structured datasets that are vital for accurate analysis and informed decision-making. By leveraging DDL commands, organizations can ensure their data remains relevant, accessible, and primed for analytical insights, thereby enhancing overall operational efficiency.

Throughout this article, key DDL commands such as:

- CREATE

- ALTER

- DROP

- TRUNCATE

- RENAME

- COMMENT

- CHECK

- PRIMARY KEY

- IDENTITY

have been explored in detail. Each command or constraint serves a distinct purpose, from establishing new database objects and modifying existing structures to enforcing data integrity and improving documentation. Together, these capabilities underscore the importance of structured data management in today's data-driven landscape, where accurate and timely information is paramount for success.

As organizations continue to navigate the complexities of data management, embracing the capabilities offered by DDL commands is not just beneficial but essential. By adopting these practices, businesses can foster a culture of data literacy, streamline their operations, and ultimately make more informed decisions. The significance of mastering DDL cannot be overstated; it is a foundational element that supports the evolving needs of data management in an increasingly competitive environment.

Frequently Asked Questions

What is the purpose of DDL commands in Initial Data Offerings (IDO)?

DDL commands are essential tools for managing databases effectively, allowing users to create, modify, and oversee high-quality data collections, ensuring they are well-structured and accessible for analysis.

How does SavvyIQ's AI-driven data framework enhance dataset management?

SavvyIQ's AI-driven data framework, including its Recursive Data Engine, helps users maximize their datasets' potential, streamlining data operations and improving the quality of insights derived from data.

What is the CREATE command used for in database management?

The CREATE command is used to establish new database objects, such as tables, views, and indexes, allowing users to define the structure of a new dataset on the IDO platform.

Can you provide an example of using the CREATE command?

An example of using the CREATE command is: CREATE TABLE TikTokSentiment (id INT PRIMARY KEY, sentiment VARCHAR(255), timestamp DATETIME); which creates a structured table for TikTok Consumer Sentiment Data.

Why is the CREATE command important for database administrators?

The CREATE command is crucial as it lays the foundation for effective information management, promoting integrity and usability of datasets for analytics.

What are common uses of the ALTER TABLE command?

Common uses of the ALTER TABLE command include adding new columns, modifying data types, renaming tables, managing index operations, and changing table options to adapt to evolving data needs.

What challenges might arise when using the ALTER TABLE command?

Some ALTER TABLE operations can be slow on large tables. Depending on the database engine and the change being made, the table may be rebuilt by creating a copy, applying the change, and swapping the copy in; other changes are applied in place.

How does effective use of the ALTER TABLE command benefit organizations?

By leveraging the ALTER TABLE command, organizations can maintain robust data collections that support informed decision-making and strategic planning, ensuring datasets remain relevant and comprehensive.