10 Essential DDL Commands for Effective Database Management

Overview

This article covers the key Data Definition Language (DDL) commands, constraints, and column properties used to define and manage database structures:

- CREATE

- ALTER

- DROP

- TRUNCATE

- RENAME

- COMMENT

- CHECK

- PRIMARY KEY

- IDENTITY

Each command is accompanied by an explanation of its syntax and functionality, providing readers with a comprehensive understanding of how to implement these commands effectively. By following best practices associated with these commands, database managers can ensure efficient database management and maintain data integrity.

Why are these DDL commands so essential? They not only facilitate the creation and modification of database structures but also play a significant role in maintaining the overall health of the database. For instance, the CREATE command allows users to define new tables, while ALTER enables modifications to existing structures. DROP, TRUNCATE, and RENAME offer additional flexibility in managing database elements, each serving unique purposes that contribute to streamlined operations. Understanding the nuances of these commands empowers database professionals to make informed decisions, leading to improved efficiency and effectiveness in their work.

In conclusion, mastering these essential DDL commands equips database managers with the tools necessary for successful database management. By implementing the insights and best practices outlined in this article, professionals can enhance their database operations, ensuring both functionality and integrity are upheld.

Introduction

The landscape of database management is evolving rapidly, making effective data handling a cornerstone of organizational success. At the heart of this transformation are essential Data Definition Language (DDL) commands that empower users to create, modify, and maintain robust database structures. This article explores these commands and the constraints that accompany them, emphasizing their significance in enhancing database performance and ensuring data integrity.

As organizations increasingly rely on these commands, a pertinent question emerges: how can they navigate the complexities of DDL to optimize their data management strategies and avoid potential pitfalls? Understanding these commands is not just beneficial; it is essential for leveraging data effectively in today's dynamic environment.

Initial Data Offering: Streamline Your Data Management with Quality Datasets

The Initial Data Offering (IDO) acts as a centralized hub for information exchange, granting users access to high-quality datasets that enhance database management efficiency. By curating unique datasets, IDO helps businesses, researchers, and organizations pinpoint the information they need for innovation and strategic planning. This focus on quality not only aids in effective decision-making but also promotes a culture of informed growth.

Moreover, IDO's steadfast commitment to community involvement makes it a preferred choice for both information purchasers and vendors. This dynamic fosters a more streamlined and effective information management process, ultimately enhancing the overall accessibility and usability of valuable resources.

How might these datasets influence your strategic initiatives? Consider the potential impact on your projects and planning.

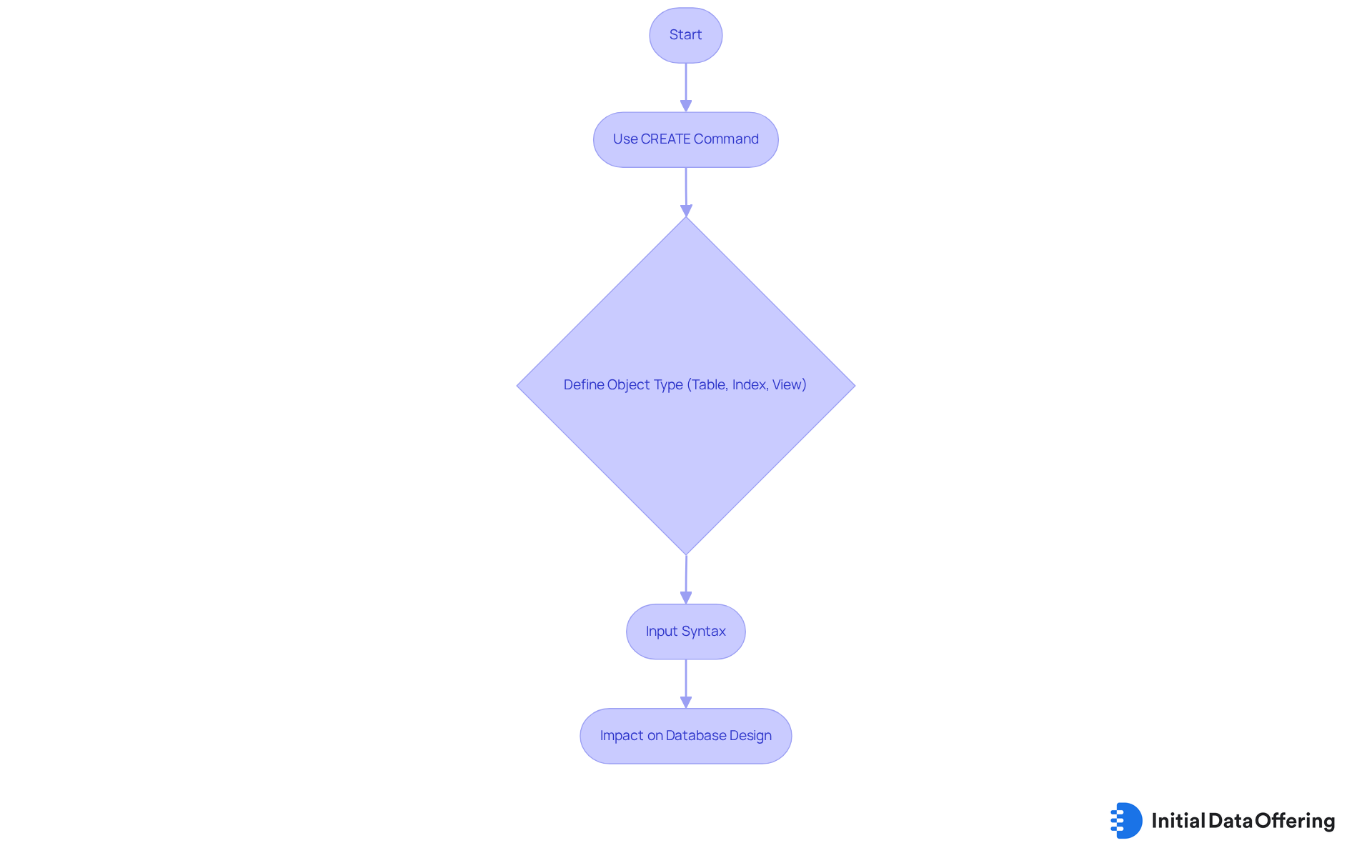

CREATE Command: Establish New Database Objects Efficiently

The CREATE command is the DDL statement used to establish new objects within a database, including tables, indexes, and views. It defines the framework for how data will be organized and accessed. For instance, the syntax for creating a new table is as follows:

CREATE TABLE table_name (
    column1 datatype,
    column2 datatype,
    ...
);

By using this command, users can effectively lay the groundwork for their databases, ensuring that they can categorize and manage their data efficiently. How might this foundational step impact your database design? Understanding the CREATE instruction and DDL commands not only enhances your technical skills but also empowers you to build robust data structures that cater to your specific needs.
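As a minimal sketch of the CREATE command in action, the example below uses Python's built-in sqlite3 module so that it runs without a database server. The table, index, and view names are hypothetical, and SQLite's type names may differ from those of other database systems.

```python
import sqlite3

# In-memory SQLite database; all object names here are illustrative.
conn = sqlite3.connect(":memory:")

# A table defines the columns and their data types.
conn.execute("""
    CREATE TABLE customers (
        customer_id INTEGER,
        full_name   TEXT,
        city        TEXT
    )
""")
# An index to speed up lookups by city.
conn.execute("CREATE INDEX idx_customers_city ON customers (city)")
# A view exposing a subset of the table's columns.
conn.execute(
    "CREATE VIEW customer_names AS SELECT customer_id, full_name FROM customers"
)

# sqlite_master is SQLite's catalog of the objects created above.
created_objects = conn.execute(
    "SELECT type, name FROM sqlite_master"
).fetchall()
print(sorted(created_objects))
```

All three statements are CREATE commands; only the object type changes. Other systems expose their catalog differently, so the final query is specific to SQLite.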

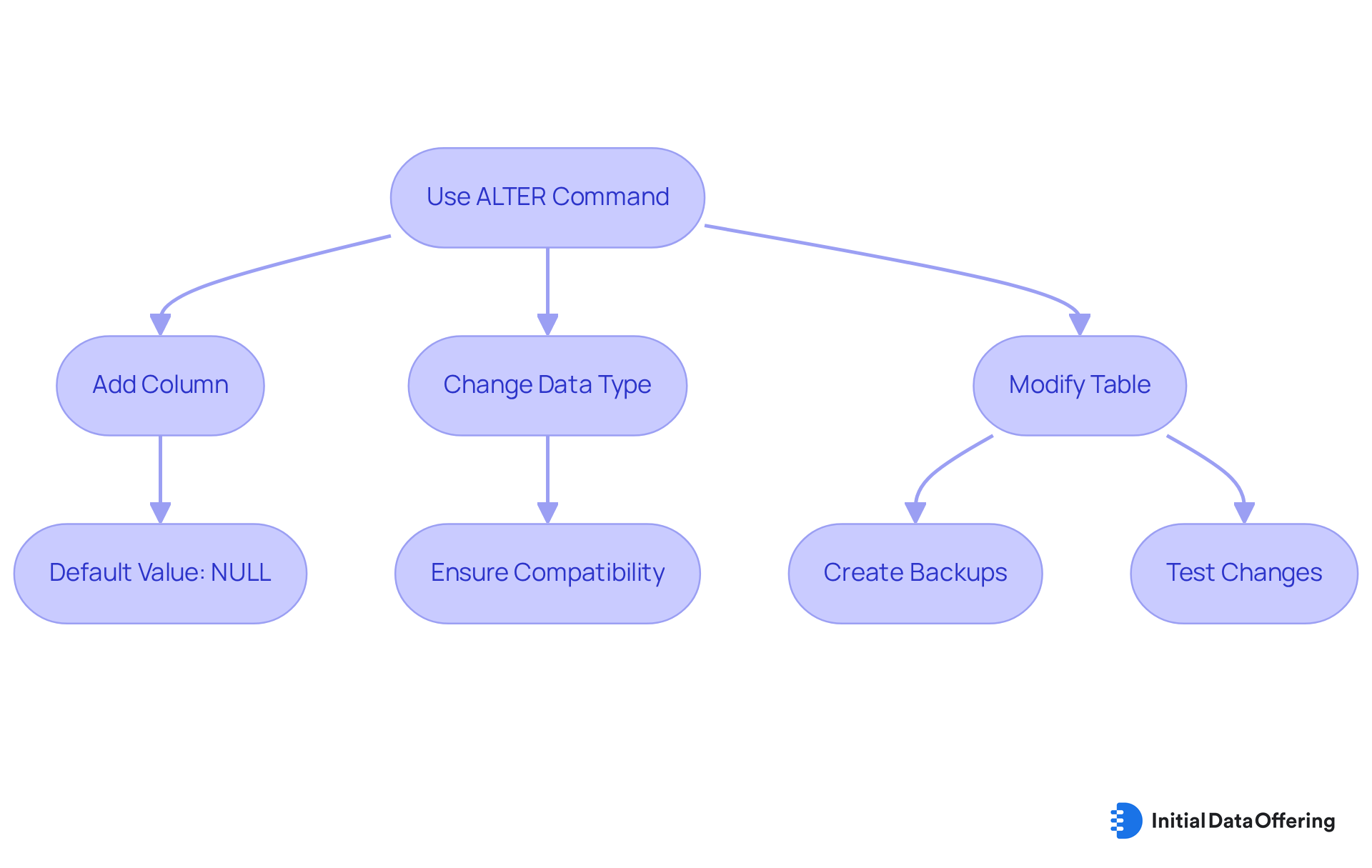

ALTER Command: Modify Existing Database Structures with Ease

The ALTER instruction, a type of DDL command, serves as a powerful tool that enables users to modify existing data structures, thereby facilitating adaptation to evolving data requirements. For instance, when adding a new column to an existing table, the syntax is as follows:

ALTER TABLE table_name
    ADD column_name datatype;

When a column is added via the ALTER command without a DEFAULT clause, every existing row holds NULL in the new column, an essential consideration for users. This instruction plays a critical role in maintaining the relevance and efficiency of a data repository. As organizations increasingly rely on the ALTER instruction, they can adjust their databases to meet new informational needs, such as incorporating additional metrics or adapting to changes in data types.
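The NULL behavior can be checked with a short sketch. It assumes SQLite (through Python's sqlite3 module) and a hypothetical products table; note that SQLite spells the clause ADD COLUMN.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (product_id INTEGER, name TEXT)")
conn.execute("INSERT INTO products VALUES (1, 'Widget')")

# No DEFAULT clause: the existing row gets NULL (None in Python).
conn.execute("ALTER TABLE products ADD COLUMN price REAL")
# With a DEFAULT clause: the existing row gets the default instead.
conn.execute("ALTER TABLE products ADD COLUMN in_stock INTEGER DEFAULT 1")

row = conn.execute(
    "SELECT product_id, name, price, in_stock FROM products"
).fetchone()
print(row)
```

The row that existed before the change reports no value for price but the declared default for in_stock, which is why a DEFAULT is worth considering whenever NULL would be misleading.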

Database professionals emphasize the importance of understanding the implications of using the ALTER function. For example, changing a column's data type requires ensuring compatibility with existing content to avoid potential errors. Furthermore, modifying tables can result in index fragmentation, which necessitates ongoing maintenance to enhance performance. Best practices for utilizing DDL commands and the ALTER instruction include:

- Creating backups prior to modifications

- Employing transactions, where the database supports transactional DDL

- Testing changes within a development environment
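The transaction practice can be sketched as follows, assuming a database with transactional DDL. SQLite is used here through Python's sqlite3 module, and the orders table is hypothetical. Some systems, MySQL and Oracle among them, commit DDL statements implicitly, so this pattern does not carry over to them.

```python
import sqlite3

# isolation_level=None puts the connection in autocommit mode, so the
# BEGIN and ROLLBACK statements below are controlled explicitly.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("CREATE TABLE orders (order_id INTEGER, total REAL)")


def column_names(connection, table):
    """Return a table's column names using SQLite's PRAGMA table_info."""
    return [row[1] for row in connection.execute(f"PRAGMA table_info({table})")]


conn.execute("BEGIN")
conn.execute("ALTER TABLE orders ADD COLUMN discount REAL")
columns_inside_txn = column_names(conn, "orders")

# Suppose a validation step failed here: undo the schema change.
conn.execute("ROLLBACK")
columns_after_rollback = column_names(conn, "orders")

print(columns_inside_txn, columns_after_rollback)
```

Inside the transaction the new column is visible; after the rollback the table is back to its original shape. A backup remains the safer net on systems where DDL cannot be rolled back.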

By effectively leveraging the ALTER instruction, organizations can enhance the flexibility of their information systems, ensuring that their frameworks align with current operational requirements and data strategies. This adaptability is crucial for facilitating informed decision-making and promoting innovation across diverse sectors. As Amr Saafan aptly notes, "The SQL Server ALTER keyword is a versatile and powerful tool for modifying data structures," underscoring its significance in effective data management.

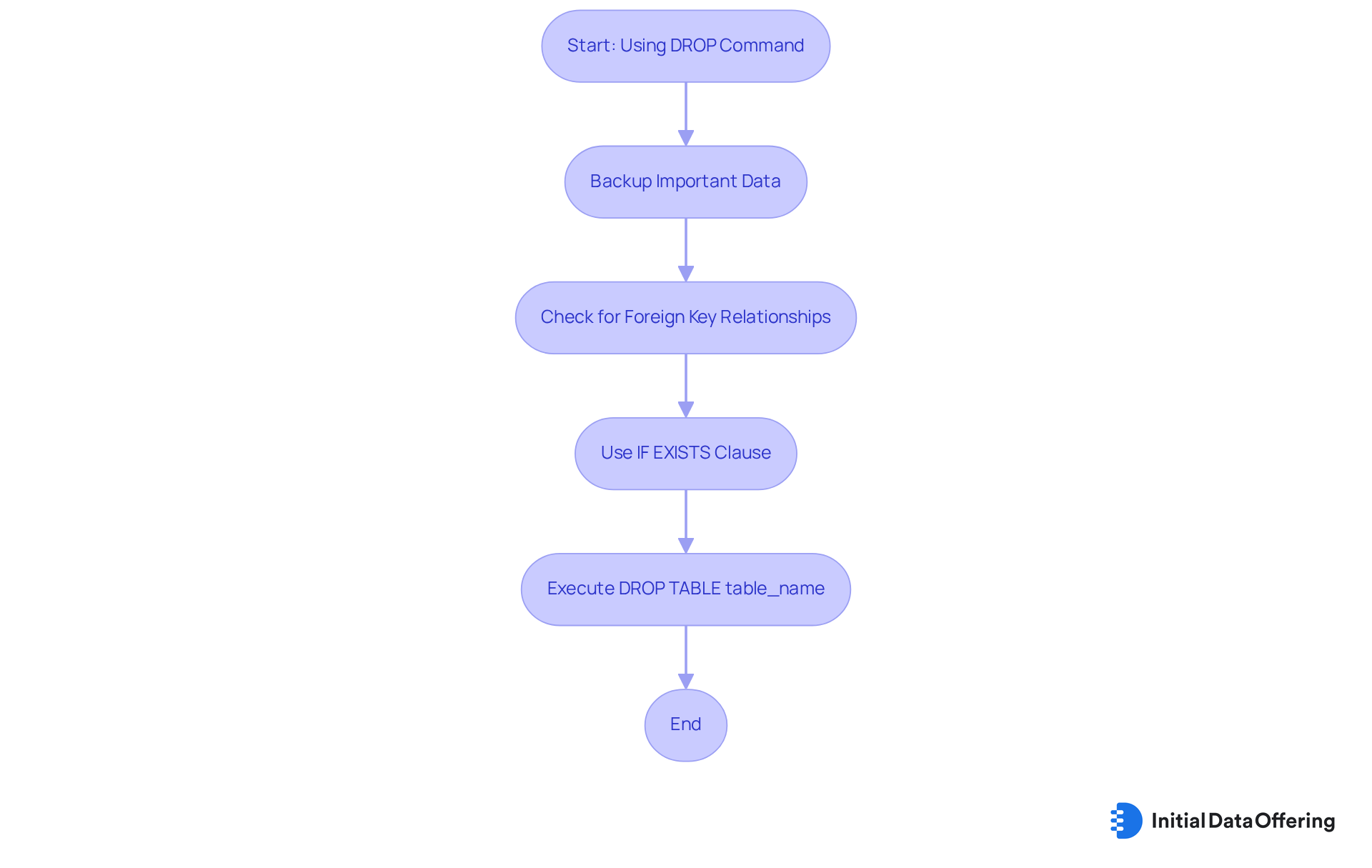

DROP Command: Safely Remove Unnecessary Database Objects

The DROP command is the DDL statement that permanently removes database objects such as tables or indexes. The syntax for executing this command is straightforward:

DROP TABLE table_name;

However, this command must be employed with caution, as it irreversibly deletes the specified object along with all associated data. This can lead to significant data loss if not handled properly. For example, organizations that maintain well-structured databases often implement stringent protocols regarding the use of the DROP function to prevent accidental deletions. A notable instance involved a financial organization that lost essential transaction information due to a poorly executed DROP instruction, underscoring the importance of careful oversight.

Data handling experts emphasize the significance of safety when utilizing the DROP function. Best practices recommend:

- Backing up important data prior to executing the operation

- Checking for foreign key relationships first, so that the drop neither fails nor cascades to dependent objects unexpectedly

- Employing the 'IF EXISTS' clause to prevent errors when attempting to drop a non-existent table, thereby ensuring smoother operations
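The effect of the IF EXISTS clause can be seen in a small sketch. It assumes SQLite via Python's sqlite3 module and a hypothetical staging_import table; the error type shown is the one raised by that module.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE staging_import (id INTEGER)")


def table_exists(connection, name):
    """Check SQLite's catalog for a table with the given name."""
    rows = connection.execute(
        "SELECT 1 FROM sqlite_master WHERE type = 'table' AND name = ?", (name,)
    ).fetchall()
    return len(rows) > 0


conn.execute("DROP TABLE IF EXISTS staging_import")
exists_after_drop = table_exists(conn, "staging_import")

# Dropping the same table again without IF EXISTS raises an error...
try:
    conn.execute("DROP TABLE staging_import")
    plain_drop_failed = False
except sqlite3.OperationalError:
    plain_drop_failed = True

# ...while IF EXISTS turns the statement into a harmless no-op.
conn.execute("DROP TABLE IF EXISTS staging_import")
print(exists_after_drop, plain_drop_failed)
```

This makes cleanup scripts safe to run more than once, which is useful in deployment pipelines where the starting state is not guaranteed.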

Understanding the distinctions among DROP, TRUNCATE, and DELETE is vital for effective data management. DROP and TRUNCATE are DDL commands, whereas DELETE belongs to the Data Manipulation Language (DML). While DROP permanently removes an object, TRUNCATE swiftly eliminates all rows from a table without logging individual deletions, and DELETE allows for conditional removal of rows. This distinction is crucial for data administrators to make informed decisions based on their specific requirements.
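The three behaviors can be compared side by side in a rough sketch. It assumes SQLite via Python's sqlite3 module and a hypothetical events table. SQLite has no TRUNCATE statement, so a DELETE without a WHERE clause stands in for it here; on systems that support it, TRUNCATE TABLE would take that step's place.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (event_id INTEGER, kind TEXT)")
conn.executemany(
    "INSERT INTO events VALUES (?, ?)",
    [(1, "click"), (2, "view"), (3, "click")],
)


def count_rows(connection):
    """Return the number of rows currently in the events table."""
    return connection.execute("SELECT COUNT(*) FROM events").fetchall()[0][0]


# DELETE with WHERE: conditional removal, the table and other rows remain.
conn.execute("DELETE FROM events WHERE kind = 'click'")
rows_after_conditional_delete = count_rows(conn)

# Stand-in for TRUNCATE: all rows removed, the table structure remains.
conn.execute("DELETE FROM events")
rows_after_clear = count_rows(conn)

# DROP: the table itself is gone, so querying it now fails.
conn.execute("DROP TABLE events")
try:
    count_rows(conn)
    table_still_exists = True
except sqlite3.OperationalError:
    table_still_exists = False

print(rows_after_conditional_delete, rows_after_clear, table_still_exists)
```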

The impact of the DROP instruction on data organization and performance is significant. When utilized correctly, it contributes to maintaining a tidy and efficient information structure, enhancing performance and simplifying data retrieval. Conversely, improper use can lead to disorganization and concerns regarding information integrity, making it essential for administrators to fully grasp the implications of this instruction. It is also important to drop referencing tables before referenced ones to uphold information integrity.
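The drop order for related tables can be illustrated with a sketch that assumes SQLite via Python's sqlite3 module, with foreign key enforcement switched on (it is off by default in SQLite). The departments and employees tables are hypothetical, and other systems report the failure differently or offer a CASCADE option.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # enforcement is opt-in in SQLite

conn.execute("CREATE TABLE departments (dept_id INTEGER PRIMARY KEY)")
conn.execute("""
    CREATE TABLE employees (
        emp_id  INTEGER PRIMARY KEY,
        dept_id INTEGER NOT NULL REFERENCES departments (dept_id)
    )
""")
conn.execute("INSERT INTO departments VALUES (10)")
conn.execute("INSERT INTO employees VALUES (1, 10)")
conn.commit()

# Dropping the referenced (parent) table first would orphan the employee
# row, so SQLite rejects the statement and keeps the table.
try:
    conn.execute("DROP TABLE departments")
    parent_drop_blocked = False
except sqlite3.DatabaseError:
    parent_drop_blocked = True

# Dropping the referencing (child) table first succeeds, then the parent.
conn.execute("DROP TABLE employees")
conn.execute("DROP TABLE departments")
remaining_tables = conn.execute(
    "SELECT name FROM sqlite_master WHERE type = 'table'"
).fetchall()
print(parent_drop_blocked, remaining_tables)
```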

In summary, the DROP command is a powerful yet potentially risky tool in data handling. By adhering to best practices and understanding its effects, organizations can effectively manage their databases while minimizing the risk of information loss. As Ben Richardson, a data management expert, states, "As a data-driven organization, you must optimize these instructions to handle your data, as they will significantly influence the success of your data strategy."

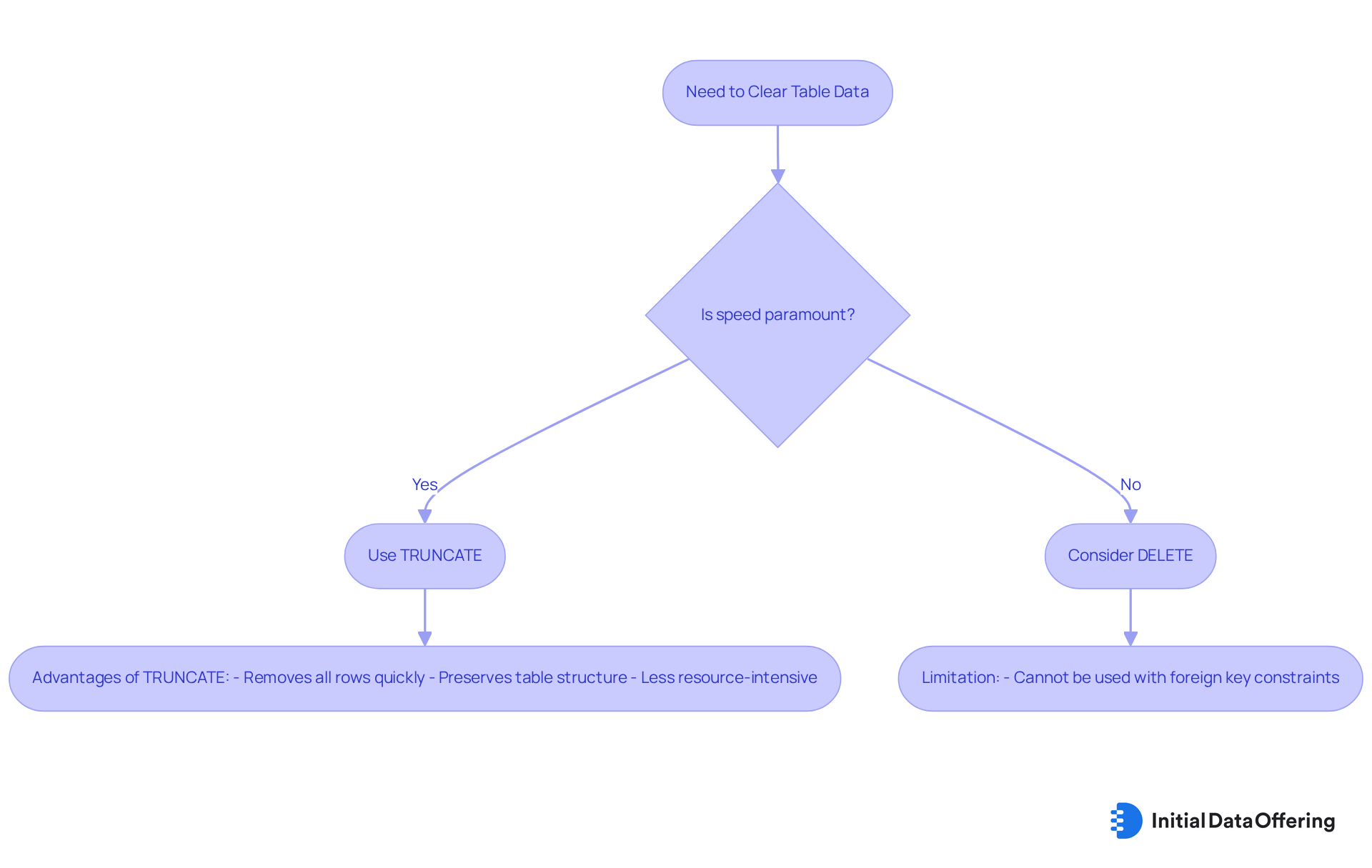

TRUNCATE Command: Efficiently Clear Table Data

The TRUNCATE command is the DDL statement that removes all rows from a table while preserving its structure. The syntax is straightforward:

TRUNCATE TABLE table_name;

This command proves particularly advantageous for large datasets, operating without the need to log individual row deletions, which significantly enhances performance. For instance, organizations managing extensive databases frequently utilize TRUNCATE to swiftly clear tables, especially when preparing for information reloading or restructuring.

Current trends indicate a growing preference for TRUNCATE in environments where speed is paramount, such as in information storage and analytics. Experts highlight that TRUNCATE can be executed rapidly, making it ideal for bulk information management tasks. Unlike DELETE, which can be slower due to its logging of each deletion, TRUNCATE minimizes resource usage by deallocating entire data pages, rendering it less resource-intensive.

Moreover, TRUNCATE is often recommended when all records must be removed and no row-level recovery is needed: it does not support WHERE clauses, and whether it can be rolled back depends on the database system and on whether it runs inside a transaction. It is also crucial to recognize that many systems do not allow TRUNCATE on a table that is referenced by a foreign key constraint, a vital consideration for data managers. This efficiency positions TRUNCATE as a preferred choice for those aiming to optimize performance while managing large volumes of data. As Dexter Chu emphasizes, "Always ensure that truncating a table is the best approach for your needs, considering alternatives like DELETE where appropriate."

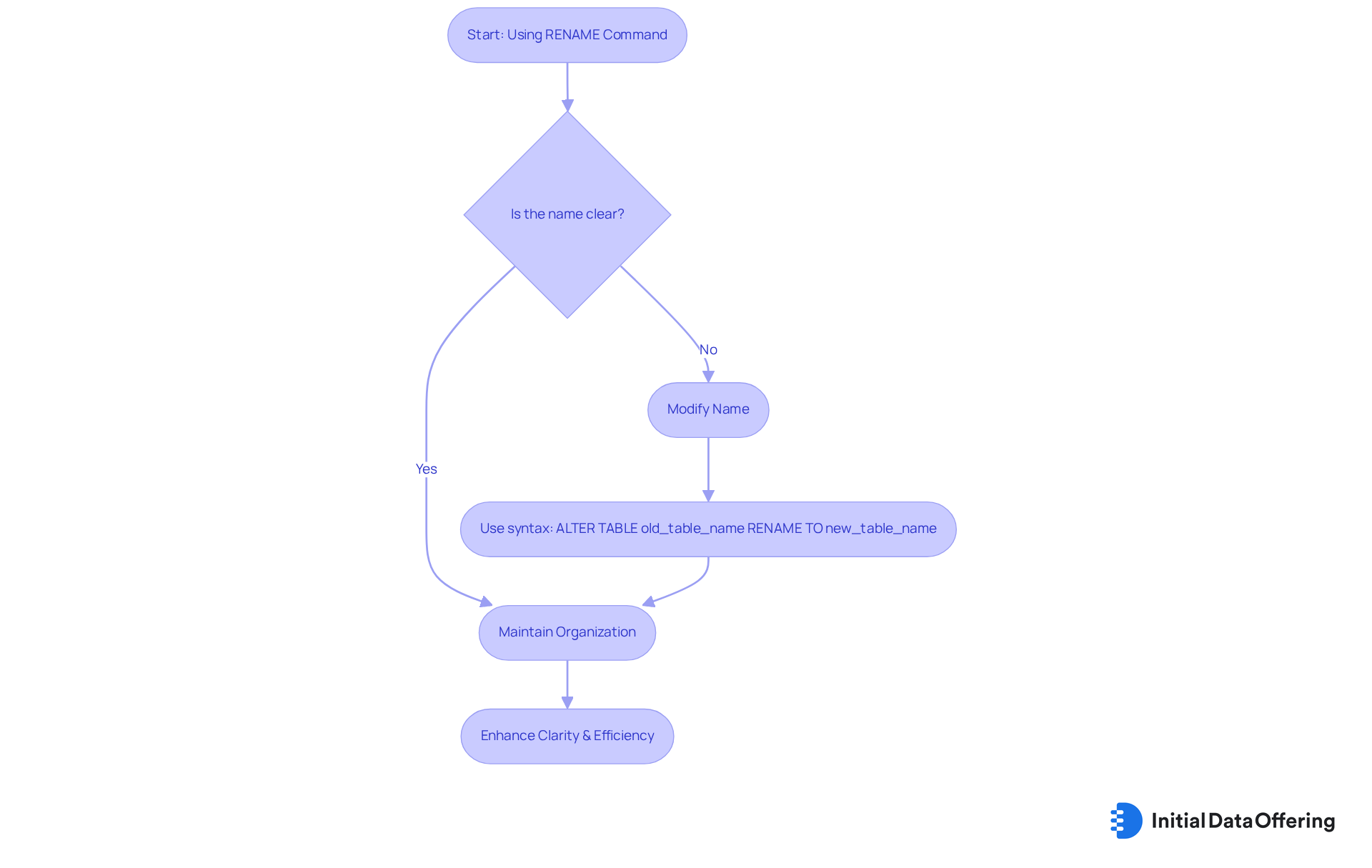

RENAME Command: Update Object Names for Better Clarity

The RENAME operation lets users change the name of an existing database object, improving clarity and organization within the system. The exact syntax varies by database system. In PostgreSQL, MySQL, and SQLite, for instance, a table is renamed through ALTER TABLE, as follows:

ALTER TABLE old_table_name RENAME TO new_table_name;

This command is vital for maintaining an organized database, especially as projects evolve and requirements change. Clear and consistent naming conventions are crucial for the efficient management of information systems, as they facilitate simpler navigation and comprehension of the structure.
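A brief sketch of a rename, assuming SQLite via Python's sqlite3 module; the names tbl1 and invoice_lines are hypothetical. The point it illustrates is that the data stays in place and only the name changes.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE tbl1 (id INTEGER, amount REAL)")
conn.execute("INSERT INTO tbl1 VALUES (1, 9.99)")

# Replace the vague name with one that describes the contents.
conn.execute("ALTER TABLE tbl1 RENAME TO invoice_lines")

table_names = [
    row[0]
    for row in conn.execute("SELECT name FROM sqlite_master WHERE type = 'table'")
]
rows = conn.execute("SELECT id, amount FROM invoice_lines").fetchall()
print(table_names, rows)
```

Queries, views, and application code that still use the old name will break after a rename, so it is worth searching for references before applying one.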

Organizations that have effectively adopted the RENAME function report significant enhancements in their data structures. A prominent financial organization, for example, employed this directive to standardize naming conventions throughout its information system, leading to improved clarity for its data analysts and a reduction in errors during data retrieval.

Data management professionals emphasize the importance of maintaining organization in evolving projects. One expert noted, "Consistent naming conventions not only improve clarity but also foster collaboration among team members, making it easier to manage changes over time." By utilizing DDL commands such as RENAME, organizations can ensure their data systems remain intuitive and accessible, ultimately fostering improved decision-making and operational efficiency.

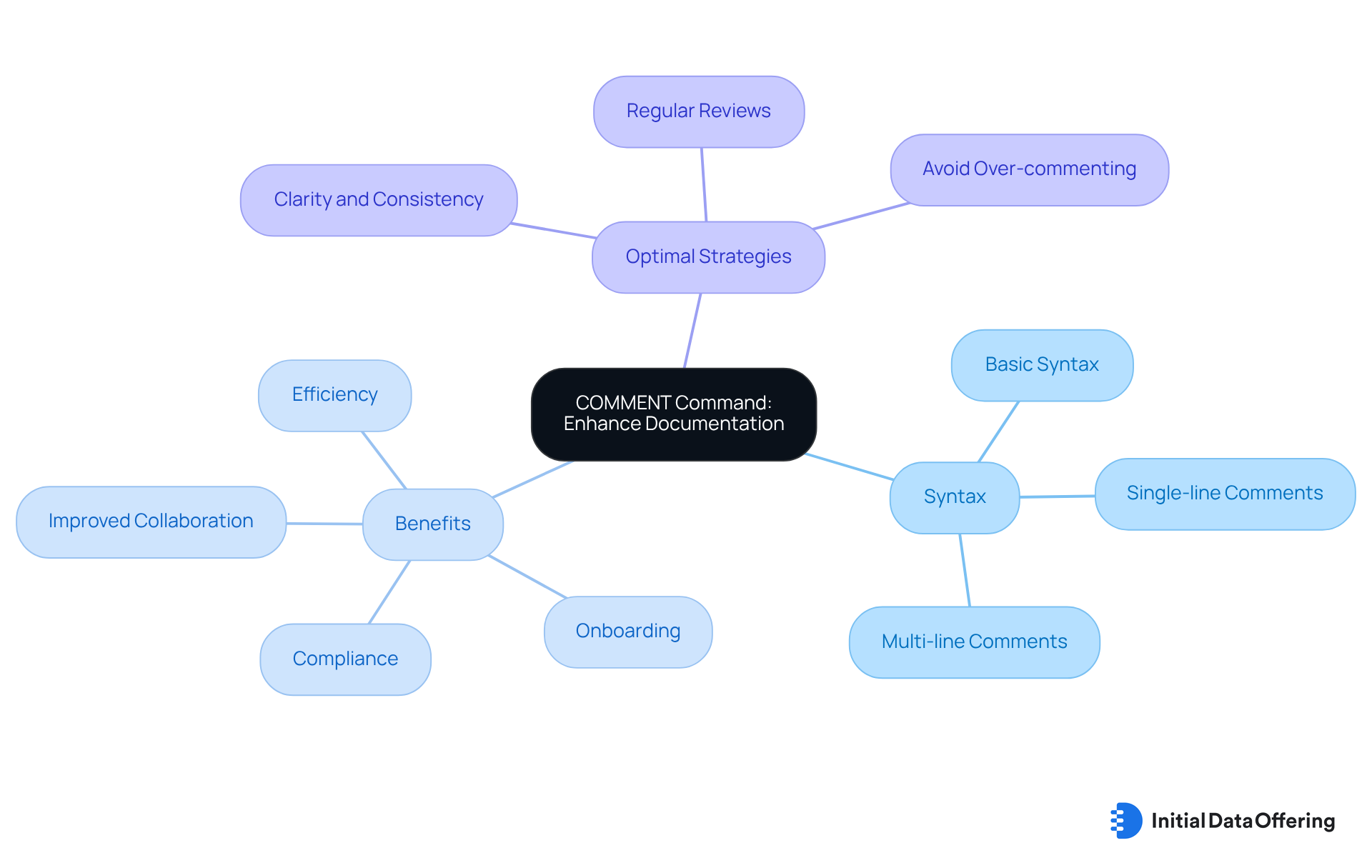

COMMENT Command: Enhance Documentation with Descriptive Notes

The COMMENT command lets users attach descriptive notes to database objects, which improves the quality of schema documentation. It is available in systems such as PostgreSQL and Oracle, and the statement must name the type of object being described:

COMMENT ON TABLE table_name IS 'Description';

This command is essential for ensuring that all team members possess a clear understanding of the database's purpose and structure. By providing context and clarifications directly within the schema, the COMMENT feature promotes improved collaboration among team members, thereby simplifying the ongoing maintenance and updates of the system.

Organizations that prioritize effective documentation through the COMMENT function often witness enhanced collaboration in their projects. For example, teams can onboard new members swiftly by utilizing well-documented schemas, which reduces the learning curve and minimizes potential misunderstandings. Moreover, clear documentation supports compliance and auditing processes, ensuring that all stakeholders are aligned with data governance practices.

Investing in comprehensive documentation not only conserves time and resources but also empowers teams to operate more efficiently. By integrating the COMMENT command into their workflows, organizations can cultivate a collaborative environment that fosters scalability and innovation in database management.

Optimal strategies for leveraging the COMMENT function include maintaining clarity and consistency in writing, as well as regularly reviewing and updating comments to prevent outdated information from causing confusion. Well-commented SQL code is inherently easier to maintain, reinforcing the argument for effective use of the COMMENT feature. By focusing on these aspects, organizations can elevate their documentation practices and nurture a culture of collaboration.

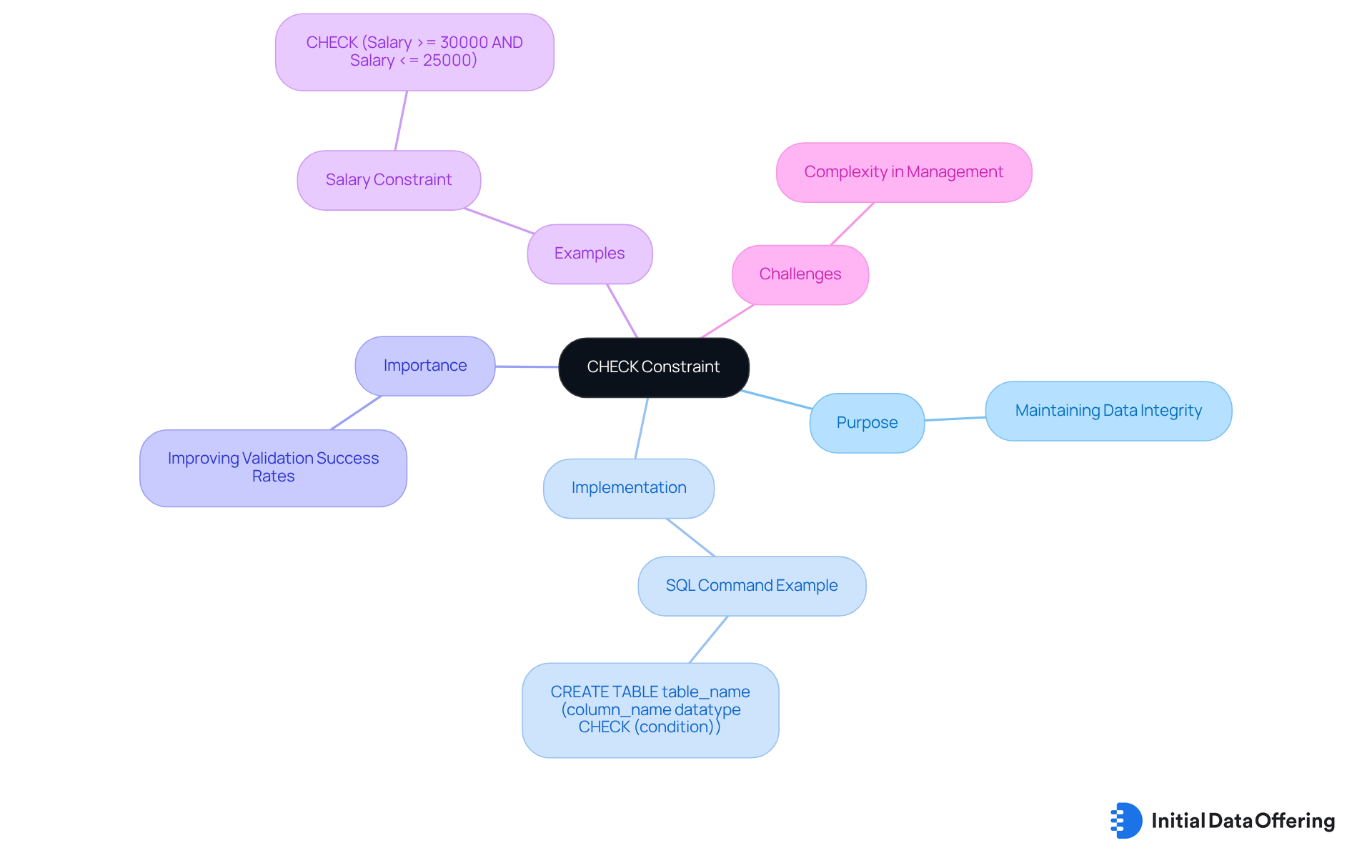

CHECK Constraint: Enforce Data Integrity with Validation Rules

The CHECK constraint serves as a vital tool for maintaining data integrity by restricting the values that can be entered into a column. For example, consider the following SQL command that demonstrates its implementation:

CREATE TABLE table_name (
    column_name datatype CHECK (condition)
);

This constraint ensures that only valid data is accepted, thereby protecting the integrity of the database. By enforcing specific rules, CHECK constraints play a crucial role in maintaining consistent information quality across applications, significantly improving validation success rates. Organizations that effectively implement CHECK constraints can prevent invalid entries, which is essential for accurate reporting and informed decision-making.

For instance, a company might apply a CHECK constraint to keep employee salaries within a defined range, such as requiring Salary to be greater than or equal to 30,000 and less than or equal to a chosen maximum. The bounds must be consistent with each other: a lower bound of 30,000 combined with a cap of 25,000 would conflict and reject every row. Such practices simplify information management and reinforce the importance of integrity in system operations. As emphasized by data integrity specialists, "Implement SQL constraints for data integrity," highlighting that robust validation rules are crucial for maintaining high-quality datasets, ultimately resulting in improved outcomes in data-driven environments.

Moreover, CHECK constraints can enhance system performance by managing validation natively, reducing the need for checks on the application side. However, as the complexity of tables and conditions increases, managing CHECK constraints can become challenging, underscoring the necessity for careful planning and implementation.
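A salary range check might look like the sketch below. It assumes SQLite via Python's sqlite3 module and a hypothetical employees table; the 250,000 upper bound is a made-up value chosen only so the range is consistent.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE employees (
        name   TEXT NOT NULL,
        salary INTEGER CHECK (salary >= 30000 AND salary <= 250000)
    )
""")

# A value inside the range is accepted.
conn.execute("INSERT INTO employees VALUES ('Ada', 85000)")

# A value below the lower bound is rejected by the database itself.
try:
    conn.execute("INSERT INTO employees VALUES ('Bob', 12000)")
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

accepted_rows = conn.execute("SELECT name, salary FROM employees").fetchall()
print(rejected, accepted_rows)
```

Because the rule lives in the schema, every application that writes to the table is held to it, without each one repeating the validation.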

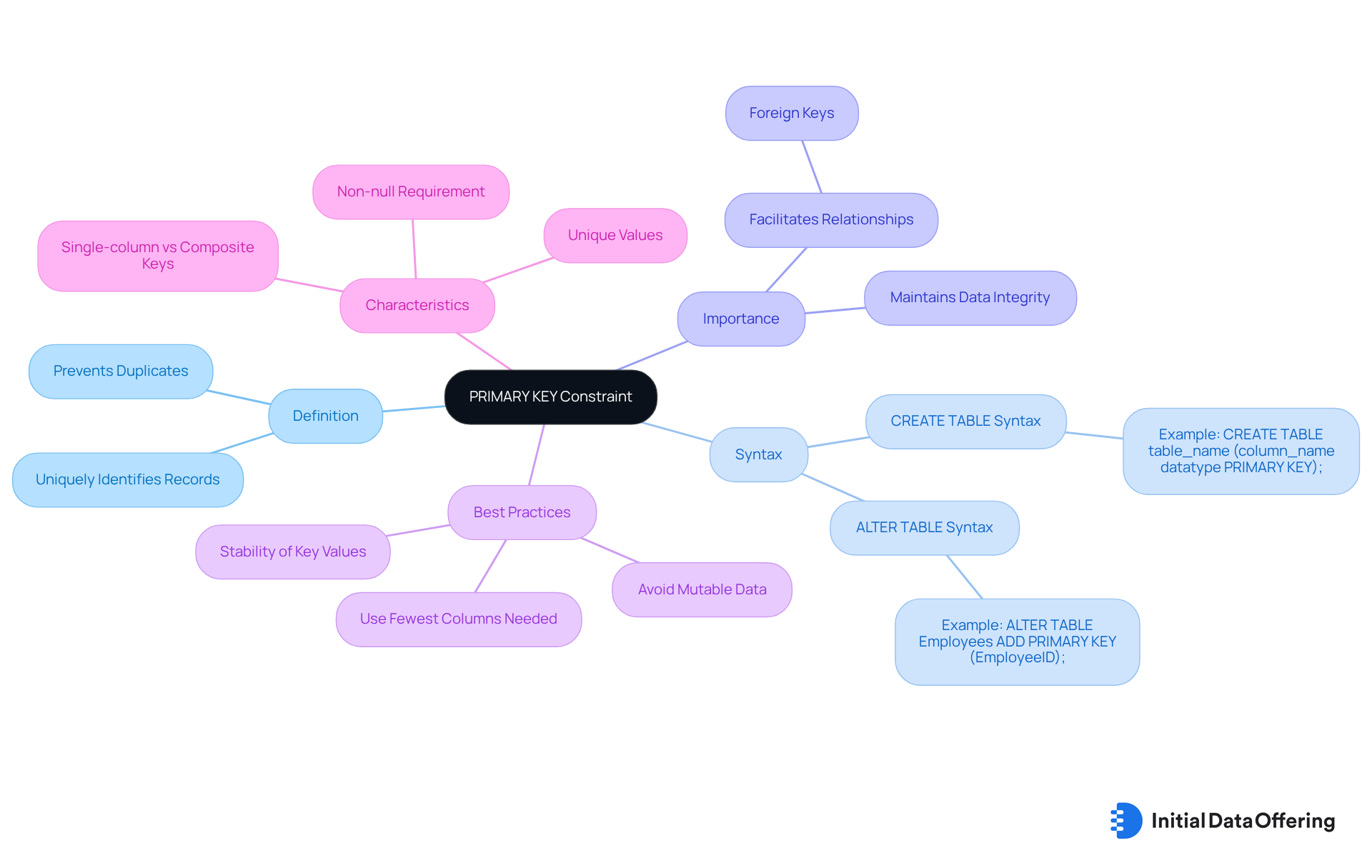

PRIMARY KEY Constraint: Ensure Unique Identification of Records

The PRIMARY KEY constraint is fundamental in relational databases, as it uniquely identifies each record within a table. The syntax for implementing this constraint is straightforward:

CREATE TABLE table_name (
    column_name datatype PRIMARY KEY
);

This constraint guarantees that no two records can share the same value in the primary key column, which is crucial for maintaining data integrity. A primary key must contain unique values and cannot contain NULL values, ensuring that every record is distinct and identifiable. By enforcing uniqueness, the PRIMARY KEY constraint prevents duplicate entries, thereby enhancing the reliability of information retrieval and updates.

Organizations that prioritize information integrity often implement the PRIMARY KEY constraint as a best practice. For example, companies utilizing strong information management systems understand that a well-defined primary key not only simplifies operations but also aids in creating relationships between tables through foreign keys. This interconnectedness is essential for ensuring consistency across related information. In fact, every table should have a defined primary key to ensure information integrity.

Database managers stress that the uniqueness enforced by the PRIMARY KEY constraint is crucial for various operations, including information retrieval, updates, and deletions. By ensuring that each record is identifiable and non-null, organizations can effectively manage their information assets, driving better outcomes and informed decision-making. Additionally, primary keys can be single-column or composite, offering flexibility in data structure design. It is also important to avoid using mutable data as a primary key, as changes to such data can lead to complications in maintaining data integrity.
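Both the single-column and composite forms can be sketched as follows, assuming SQLite via Python's sqlite3 module. The students and enrollments tables are hypothetical. The composite key columns are declared NOT NULL explicitly, because SQLite, unlike most systems, does not always imply it.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Single-column primary key.
conn.execute(
    "CREATE TABLE students (student_id INTEGER PRIMARY KEY, name TEXT NOT NULL)"
)
# Composite primary key: the pair of values must be unique.
conn.execute("""
    CREATE TABLE enrollments (
        student_id INTEGER NOT NULL,
        course_id  INTEGER NOT NULL,
        PRIMARY KEY (student_id, course_id)
    )
""")

conn.execute("INSERT INTO students VALUES (1, 'Ada')")
try:
    conn.execute("INSERT INTO students VALUES (1, 'Grace')")  # same key again
    duplicate_rejected = False
except sqlite3.IntegrityError:
    duplicate_rejected = True

conn.execute("INSERT INTO enrollments VALUES (1, 101)")
conn.execute("INSERT INTO enrollments VALUES (1, 102)")  # same student, new course
try:
    conn.execute("INSERT INTO enrollments VALUES (1, 101)")  # same pair again
    duplicate_pair_rejected = False
except sqlite3.IntegrityError:
    duplicate_pair_rejected = True

print(duplicate_rejected, duplicate_pair_rejected)
```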

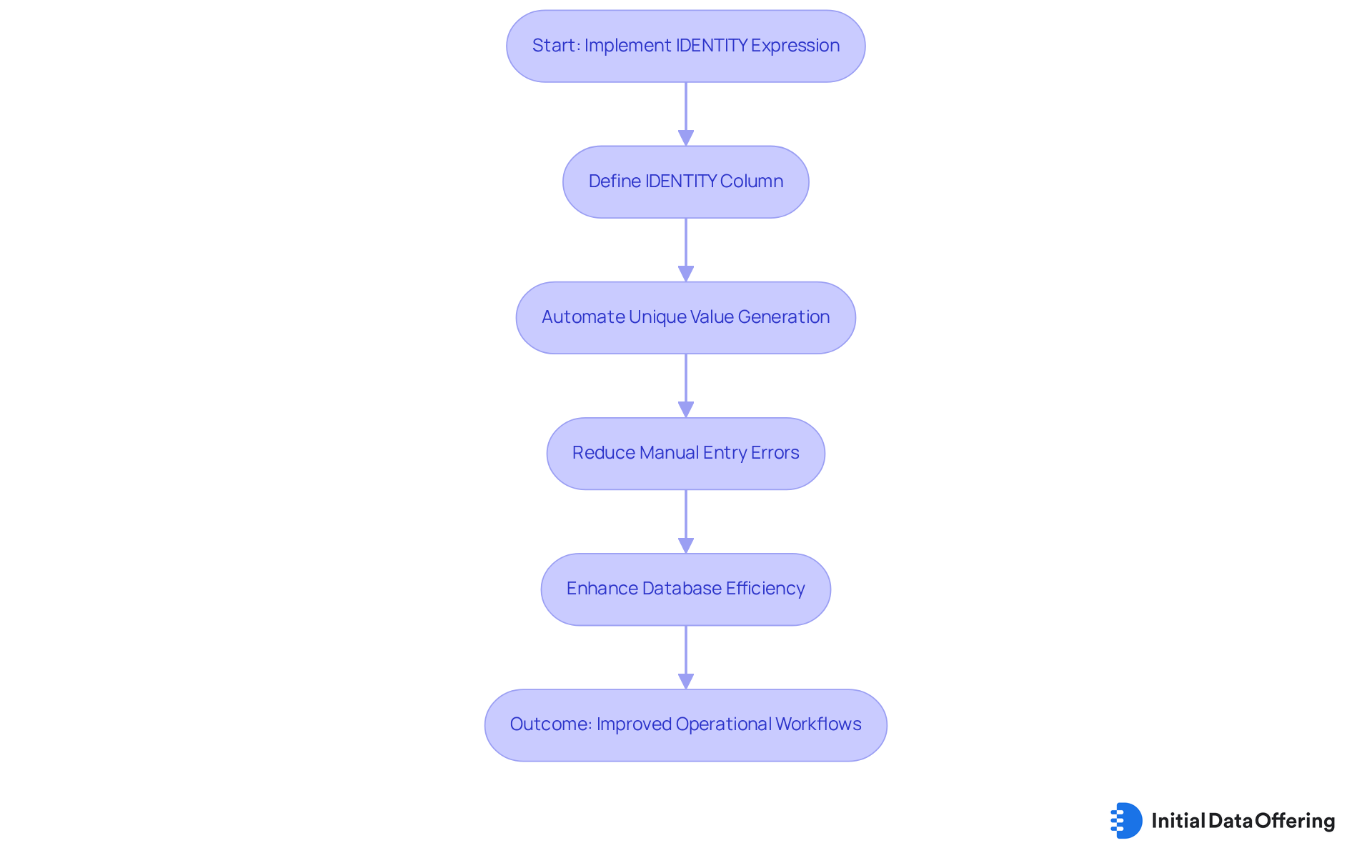

IDENTITY Expression: Automatically Generate Unique Values for New Records

The IDENTITY property facilitates the automatic creation of unique values for new records. By defining a column with IDENTITY, users can streamline the process of adding entries, as each new record is assigned an identifier without the need for manual input. The syntax below is the SQL Server form, IDENTITY(seed, increment); other database systems provide comparable features under different syntax:

CREATE TABLE table_name (
    column_name INT IDENTITY(1,1)
);

This approach not only simplifies record addition but also enhances database efficiency by reducing potential errors associated with manual entry. Industry experts note that automating unique value generation significantly improves operational workflows, allowing organizations to focus on more strategic tasks. For instance, companies utilizing the IDENTITY expression have reported enhanced productivity and precision in their information handling processes.
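Automatic value generation can be sketched as below. SQLite does not support the IDENTITY(1,1) syntax, so this example swaps in SQLite's own equivalent, INTEGER PRIMARY KEY AUTOINCREMENT, run through Python's sqlite3 module. The tickets table is hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE tickets (
        ticket_id INTEGER PRIMARY KEY AUTOINCREMENT,
        subject   TEXT NOT NULL
    )
""")

generated_ids = []
for subject in ("Login fails", "Reset password", "Export report"):
    # ticket_id is omitted from the INSERT; the database assigns it.
    cursor = conn.execute("INSERT INTO tickets (subject) VALUES (?)", (subject,))
    generated_ids.append(cursor.lastrowid)

print(generated_ids)
```

The application never chooses an identifier, which removes one source of duplicate or mistyped keys.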

Mirva Laatunen, an Investment Analyst, emphasizes that the potential of automation software is transforming data management practices. Recent trends indicate a growing adoption of automatic value generation techniques across various sectors. Organizations are progressively acknowledging the advantages of incorporating such features into their information systems, leading to more efficient information management and enhanced overall performance. The IDENTITY expression exemplifies how contemporary data management practices can streamline operations while ensuring data integrity.

To effectively implement the IDENTITY expression, consider reviewing case studies of organizations that have successfully adopted this feature. These examples can provide valuable insights into best practices and potential challenges. This proactive approach will help you leverage the full benefits of automatic unique value generation in your database management strategies.

Conclusion

Understanding and effectively implementing Data Definition Language (DDL) commands is crucial for optimal database management. These commands—CREATE, ALTER, DROP, TRUNCATE, RENAME, COMMENT, CHECK, PRIMARY KEY, and IDENTITY—serve as the backbone for structuring, modifying, and maintaining databases. By mastering these commands, organizations can enhance their data integrity, streamline operations, and support informed decision-making processes.

Throughout the article, key insights into each command's functionality and best practices have been highlighted. The CREATE command lays the foundation for data structures, establishing a robust framework. In contrast, the ALTER command allows for necessary modifications as business needs evolve, ensuring adaptability. The DROP and TRUNCATE commands facilitate efficient data management by enabling the safe removal of unnecessary objects, while the RENAME and COMMENT commands enhance clarity and documentation. Furthermore, constraints like CHECK and PRIMARY KEY ensure data quality and integrity, while the IDENTITY expression automates unique value generation, significantly improving operational efficiency.

Incorporating these DDL commands into database management strategies not only optimizes performance but also fosters a culture of informed growth and innovation. As organizations navigate an increasingly data-driven landscape, prioritizing the effective use of these commands will be instrumental in achieving robust data management and supporting strategic initiatives. By embracing these tools, organizations can enhance their database systems, ensuring they remain agile, efficient, and aligned with their goals.

Frequently Asked Questions

What is the Initial Data Offering (IDO)?

The Initial Data Offering (IDO) is a centralized hub for information exchange that provides access to high-quality datasets, enhancing database management efficiency for businesses, researchers, and organizations.

How does IDO benefit users?

IDO helps users pinpoint essential information for innovation and strategic planning, aids in effective decision-making, and promotes a culture of informed growth through its focus on quality datasets.

What is the CREATE command in database management?

The CREATE command is a Data Definition Language (DDL) command used to establish new data structures within a database, such as tables, indexes, and views.

What is the syntax for creating a new table using the CREATE command?

The syntax for creating a new table is: CREATE TABLE table_name ( column1 datatype, column2 datatype, ... );

What is the purpose of the ALTER command in database management?

The ALTER command is a DDL command that allows users to modify existing data structures, facilitating adaptation to evolving data requirements.

How do you add a new column to an existing table using the ALTER command?

To add a new column, the syntax is: ALTER TABLE table_name ADD column_name datatype;

What happens to new columns added via the ALTER command?

Unless a DEFAULT is specified, every existing row holds NULL in the newly added column.

What are some best practices for using the ALTER command?

Best practices include creating backups prior to modifications, employing transactions, and testing changes within a development environment.

Why is understanding the ALTER command important for database professionals?

Understanding the ALTER command is crucial for ensuring compatibility with existing content, avoiding potential errors, and maintaining the relevance and efficiency of a data repository.